The AI-coding landscape in 2025 isn’t short on hype. Tools like Cursor, Claude, Lovable, and Cosine all promise to speed up development. But for engineering teams, the real question isn’t “who generates code faster?” — it’s “which tool helps us ship faster, safer, and with fewer regressions?”

This article looks at how these four platforms perform when developers use them to build real projects. Part 1 compares their approaches side by side; Part 2 puts them through hands-on experiments.

Part 1

1. How each tool approaches AI-assisted development

Cosine

Cosine isn’t an IDE assistant. It runs inside your delivery pipeline, opening evidence-rich pull requests with tests, policy checks, and rationale for reviewers. It’s designed for teams, especially those with CI, security, or compliance requirements. Cosine’s architecture lives inside your boundary (on-prem or VPC) and integrates with your repos and CI/CD, so it improves merge rate and build reliability without sending code outside the org.

Cursor

Cursor builds on VS Code, adding chat-based context awareness. You can ask it to refactor, debug, or scaffold features inline. It’s personal and immediate, ideal for developers working solo or in small teams. It handles local repos well but still relies on GitHub and manual deployment setups. Cursor shines in editing speed but doesn’t automate testing or review gates.

Claude (Sonnet 4.5)

Claude’s strength is reasoning — large context windows, clean explanations, and detailed responses. It’s excellent at understanding system-level architecture or generating well-commented code. But it lacks native repo or CI awareness. You can copy/paste between Claude and your IDE, but orchestration is manual. Great for design discussions, weaker for automation.

Lovable

Lovable differs from the three tools above: it aims for instant gratification. You describe the app you want (“a blog with CMS”), and it scaffolds, builds, and hosts it. It’s built for speed, the “vibe-coding” experience. However, its architecture is more opaque, debugging happens after deployment, and version control is… let’s say not first-class. Perfect for prototypes, less suited to sustained team iteration.

2. Collaboration and team workflows

AI adoption today is highest in code drafting (82%) but lowest in testing and review (13%) - source. Cursor and Lovable live in that first category — great at creation, limited beyond it. Cosine sits in the second: review, CI, and policy-aware automation.

That distinction matters for teams. In Cosine, agents attach rationale, test results, and security evidence to each pull request. Reviewers get proof and context, not just code. Cursor and Lovable still expect humans to run tests, interpret results, and fix inconsistencies. Claude helps spot logical issues but doesn’t manage artifacts or pipelines.

For team use, where every commit goes through CI, tests, and approvals, Cosine’s orchestration approach directly targets throughput.

3. Security, auditability, and trust

Security remains a pain point for AI-assisted code. Studies cited in “The AI Adoption Paradox” show that developers using generic assistants often ship less secure code while feeling more confident about it.

Cosine’s design avoids that trap. Because it runs within your infrastructure and attaches audit trails to every AI-touched change, reviewers can trace provenance and compliance.

Cursor, Lovable, and typical Claude usage rely heavily on cloud-based processing and infrastructure. For open or internal projects this is usually fine. But in regulated environments, where you need strict control, audit trails, data residency, or compliance, that architecture introduces extra friction, which tools that run inside your boundary are better suited to avoid.

When trust and reproducibility matter, Cosine’s model of “policy-aware autonomy inside your boundary” is the only one of the four aligned with enterprise standards.

4. The bottom line

| Tool | Strength | Limitation | Best for |
|---|---|---|---|
| Cosine | Policy-aware orchestration, audit trails, test-backed PRs, deep integration with CI/CD and internal workflows | Requires initial repo/CI setup; learning curve for agents and rules | Teams that prioritize throughput, security, compliance, and scalability |
| Cursor | Great inline coding, multi-file edits, and team governance (Team Rules, SSO, user roles) | Doesn’t yet manage full delivery pipelines or enforce policy out of the box | Teams wanting AI inside the IDE plus incremental governance |
| Lovable | Fast scaffolding, built-in deploy and preview, full-stack MVP support | Less mature for complex iterations or enterprise governance | Rapid prototyping, demos, early-stage features |
| Claude / Claude Code | Strong reasoning, architecture guidance, deep context understanding | Not designed to manage repos, CI, or audit flows by itself | Strategy design, prototyping, architecture assistance |

Cursor and Lovable are impressive. For reasoning, Claude wins. But for sustained, auditable, team-level delivery, Cosine stands apart: it operates not in the IDE but across the entire pipeline.

Speed feels good. Throughput scales.

Part 2 - Putting AI Coding Tools to the Test

In this second part, we move from theory to practice. The goal is to see how Cosine, Cursor, Claude, and Lovable behave when a first-time user gives them the same prompts, going through the real onboarding and build experience.

Prompt 1:

⇒ Build a static blog site with Next.js. Posts should be in Markdown files. Generate pages for each post. Add a homepage listing all posts. I should be able to see this online without downloading any files...
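To make the task concrete, here is a minimal sketch of the data layer Prompt 1 implies: read Markdown posts, list them on a homepage, and derive one page per post. It is not code produced by any of the four tools. The front-matter fields (`title`, `date`), the `/posts/<slug>` route, and the in-memory `files` map are assumptions for illustration; a real Next.js app would read files from disk, likely use a front-matter parser, and feed the slugs to something like `generateStaticParams` in the App Router.

```typescript
// Sketch only: Markdown posts -> homepage list + one static page per post.
// Assumptions: each post starts with a simple front-matter block holding
// `title` and `date`, and files are passed in memory instead of read from disk.

interface Post {
  slug: string;  // derived from the file name; used in the (hypothetical) /posts/<slug> URL
  title: string;
  date: string;  // ISO date such as "2025-01-31", so string order equals date order
  body: string;  // raw Markdown after the front matter
}

// Split "---\nkey: value\n---\nbody" into metadata and body.
function parsePost(fileName: string, source: string): Post {
  const match = /^---\r?\n([\s\S]*?)\r?\n---\r?\n?([\s\S]*)$/.exec(source);
  if (!match) {
    throw new Error(`${fileName}: missing front matter`);
  }
  const meta: Record<string, string> = {};
  for (const line of match[1].split(/\r?\n/)) {
    const colon = line.indexOf(":");
    if (colon > 0) {
      meta[line.slice(0, colon).trim()] = line.slice(colon + 1).trim();
    }
  }
  return {
    slug: fileName.replace(/\.md$/, ""),
    title: meta["title"] ?? fileName,
    date: meta["date"] ?? "",
    body: match[2],
  };
}

// Homepage data: every Markdown post, newest first.
function listPosts(files: Record<string, string>): Post[] {
  return Object.entries(files)
    .filter(([name]) => name.endsWith(".md"))
    .map(([name, source]) => parsePost(name, source))
    .sort((a, b) => (a.date < b.date ? 1 : a.date > b.date ? -1 : 0));
}

// The slugs a static build would pre-render as individual post pages.
function postSlugs(files: Record<string, string>): string[] {
  return listPosts(files).map((post) => post.slug);
}

const files: Record<string, string> = {
  "hello-world.md": "---\ntitle: Hello World\ndate: 2025-01-10\n---\nFirst post.",
  "second-post.md": "---\ntitle: Second Post\ndate: 2025-02-01\n---\n# Heading\nMore text.",
  "notes.txt": "not a post, so it is skipped",
};

for (const post of listPosts(files)) {
  console.log(`${post.date}  ${post.title}  ->  /posts/${post.slug}`);
}
```

Keeping parsing, listing, and slug generation as separate pure functions means the homepage and each post page can share one source of truth, which is the part of the prompt most likely to drift when generated piecemeal.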

Prompt 2:

⇒ Let the user add new articles
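Prompt 2 turns a read-only site into one with a write path. The sketch below shows the core of that step under stated assumptions: the form fields (`title`, `body`, optional `date`), the `posts/<slug>.md` layout, and the function names are hypothetical, not taken from any tool’s output. It only builds the new file’s path and contents and rejects duplicate slugs; it does not persist anything.

```typescript
// Hypothetical sketch: turn an "Add article" form submission into a Markdown file.

interface NewArticle {
  title: string;
  body: string;
  date?: string; // ISO date; defaults to today (UTC) when omitted
}

// "My First Post!" -> "my-first-post"
function slugify(title: string): string {
  return title
    .toLowerCase()
    .normalize("NFKD")
    .replace(/[\u0300-\u036f]/g, "") // drop combining accents left by NFKD
    .replace(/[^a-z0-9]+/g, "-")     // runs of other characters become one hyphen
    .replace(/^-+|-+$/g, "");        // trim leading/trailing hyphens
}

// Build the file path and contents, refusing to overwrite an existing post.
function createArticle(
  input: NewArticle,
  existingSlugs: Set<string>,
): { path: string; contents: string } {
  const title = input.title.trim();
  if (!title) throw new Error("title is required");
  const slug = slugify(title);
  if (!slug) throw new Error("title must contain letters or digits");
  if (existingSlugs.has(slug)) throw new Error(`a post named "${slug}" already exists`);
  const date = input.date ?? new Date().toISOString().slice(0, 10);
  const contents = `---\ntitle: ${title}\ndate: ${date}\n---\n${input.body}\n`;
  return { path: `posts/${slug}.md`, contents };
}

const draft = createArticle(
  { title: "Shipping with AI", body: "Draft text.", date: "2025-03-01" },
  new Set(["hello-world"]),
);
console.log(draft.path); // posts/shipping-with-ai.md
```

Generating the file is only half of the feature. On a purely static build, a post added at runtime has no pre-rendered page until the site is rebuilt or the page is rendered on demand, and on many serverless hosts the deployed file system is not a durable place to store new files. That is part of why “let the user add new articles” is harder on a static blog than it first looks.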

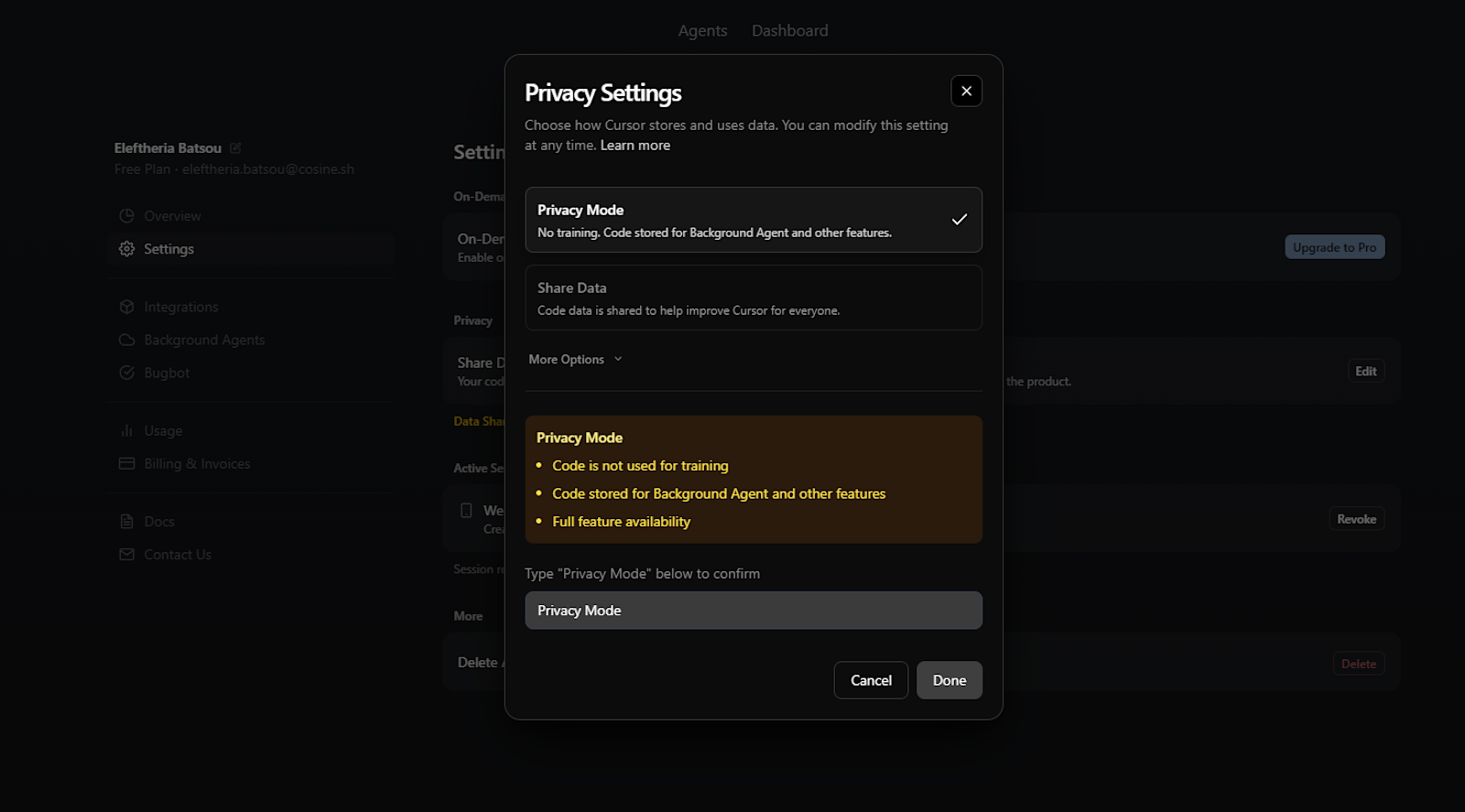

Cursor - Prompt 1:

Setup took longer than expected.

After I registered, connected GitHub, selected the free plan, and enabled privacy mode, the web version still required a new repository before I could prompt.

From prompt to deployment: ~20 minutes using GPT-5, plus a manual Vercel connection. The generated blog looked good — arguably the most detailed one — but the time cost for a simple scaffold was high.

Later, repeating the same flow inside the Cursor IDE felt faster (~5 minutes) but produced a simpler result (more on this below).

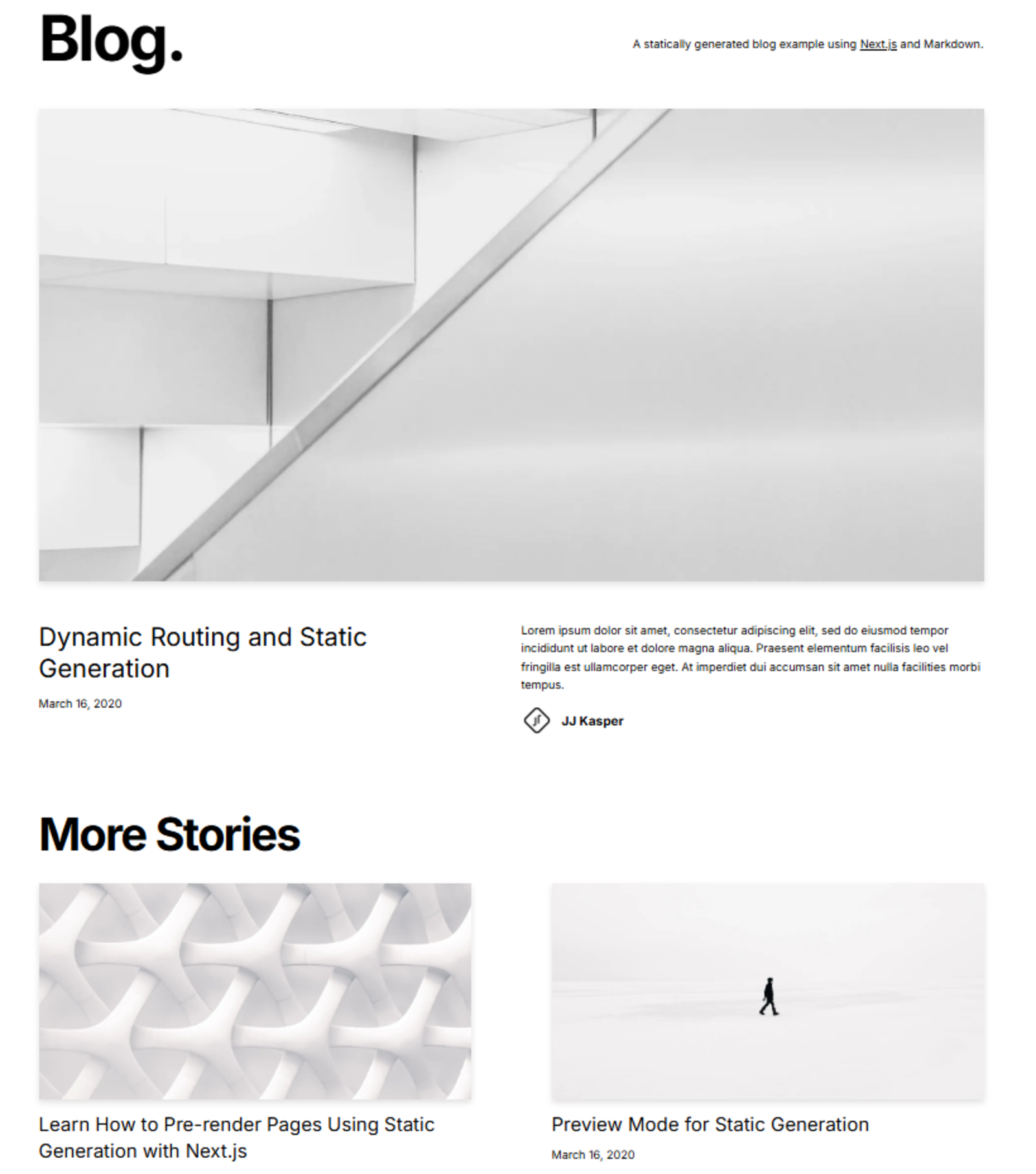

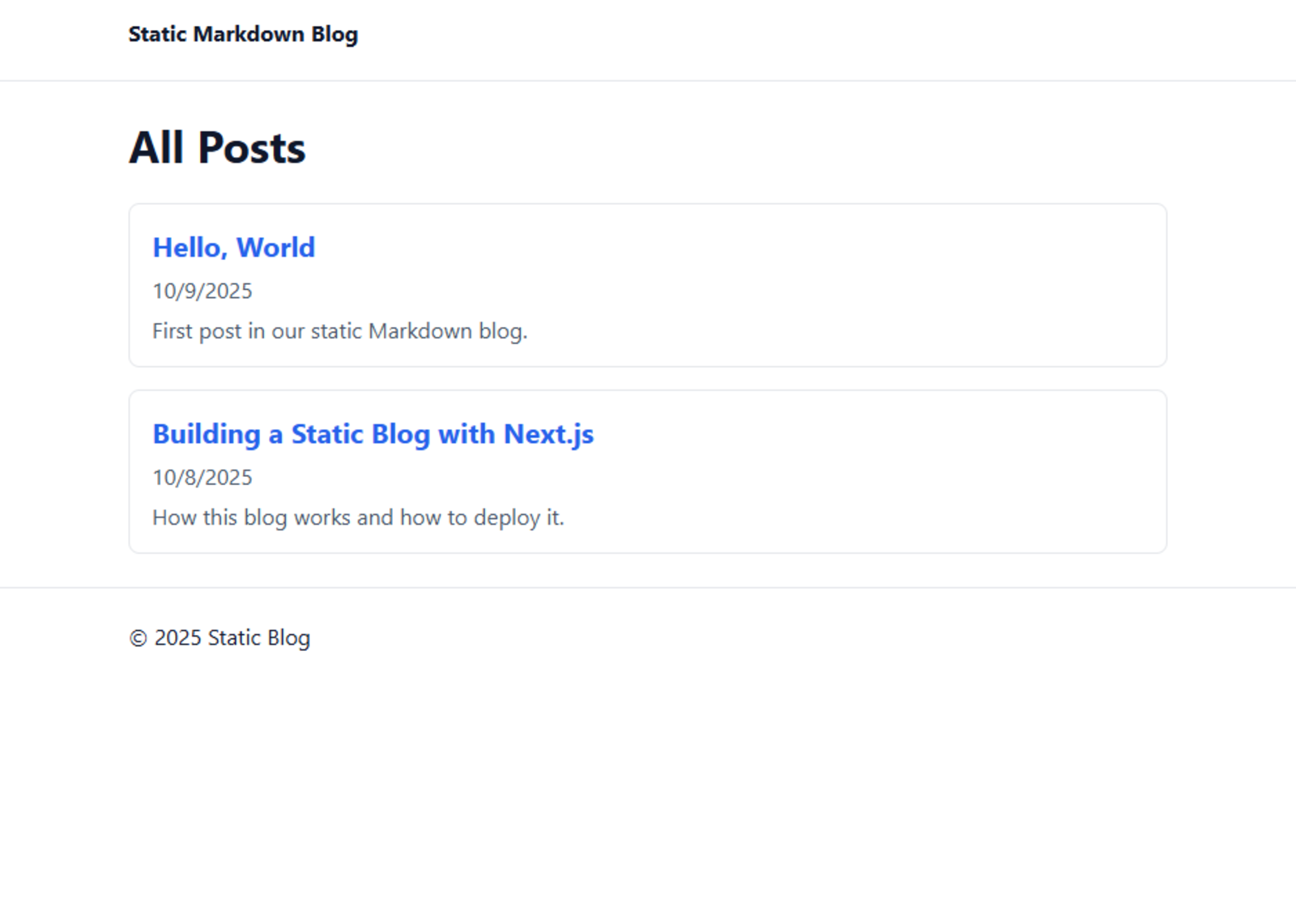

Claude - Prompt 1:

The process was friction-free. Registration, GitHub connection (optional), and prompting took 1–2 minutes. Claude quickly scaffolded a clean static blog without requiring external deployment setup. The experience felt smoother than Cursor’s.

Overall: fast, elegant, but credits can limit iteration on the free plan (more on this below).

Here you can see the result, done with Sonnet 4.5.

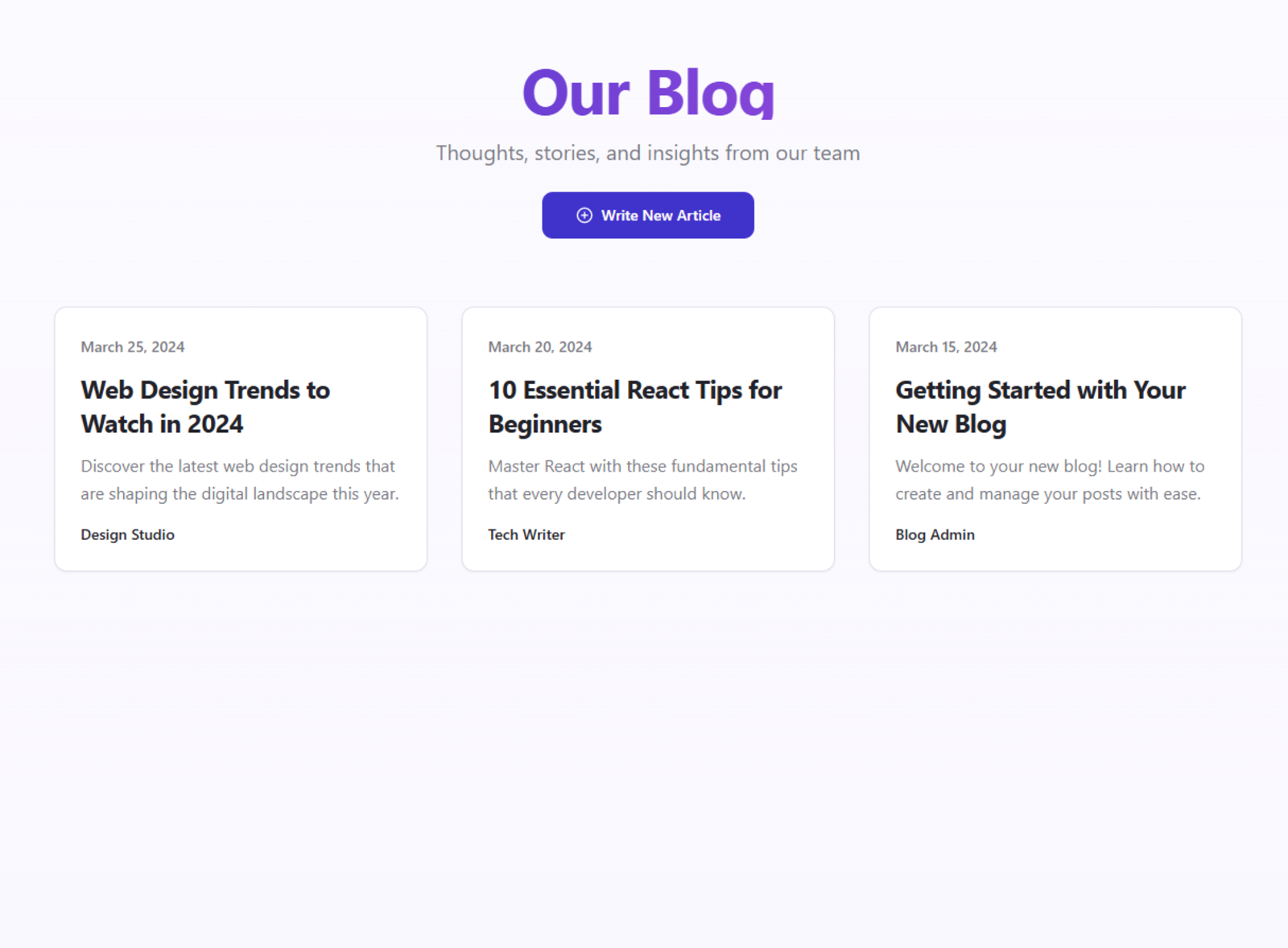

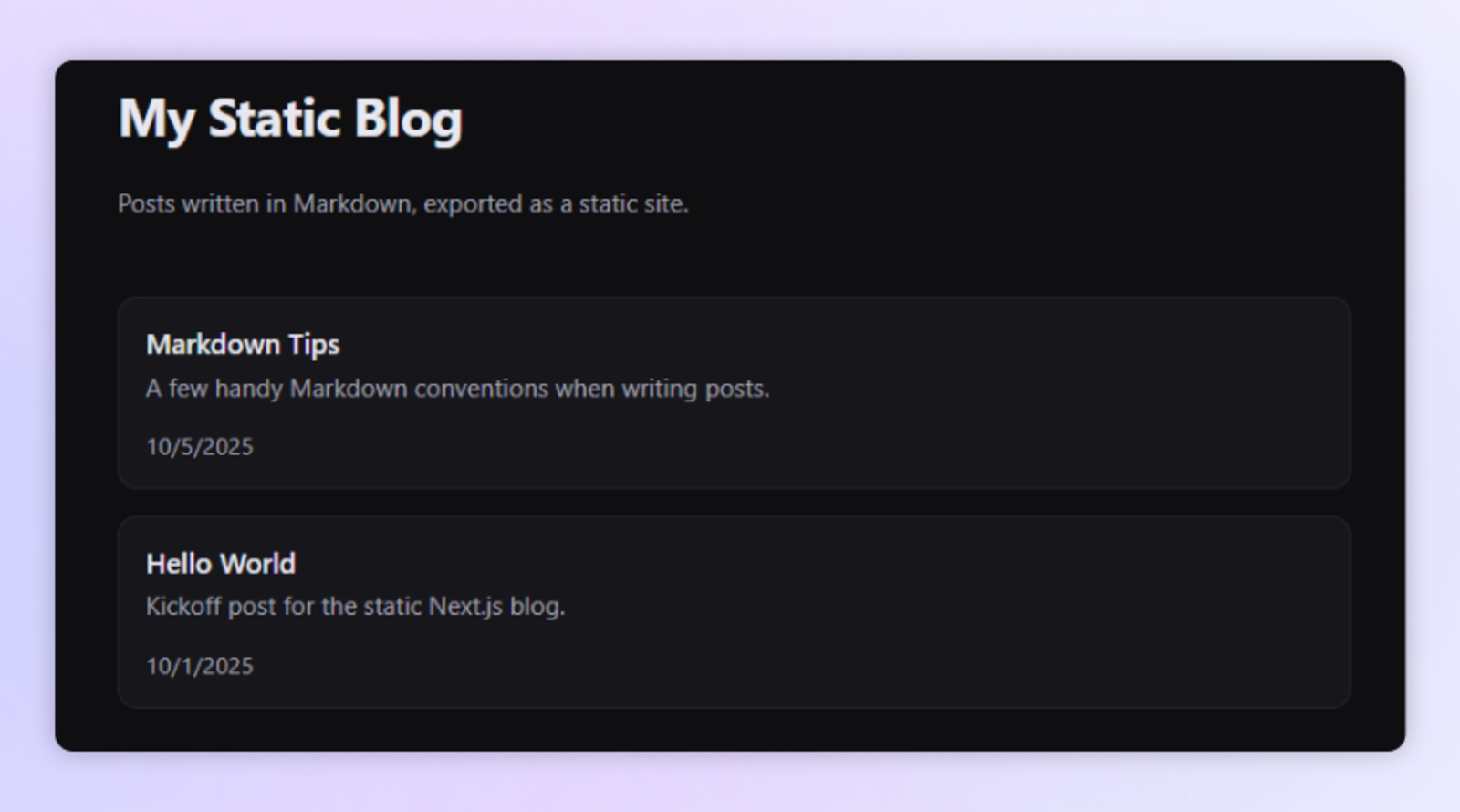

Lovable - Prompt 1:

Lovable lives up to its reputation for speed. After signing up, one short prompt generated a full working blog in 1–2 minutes. No GitHub or CLI needed — everything happens on the web.

A minor error popped up, but clicking “Fix the error” solved it automatically in about a minute. Lovable doesn’t let you choose the model or fine-tune behavior, but it delivers an instant result with deployment and preview URLs ready.

Perfect for quick prototypes, with limited control beyond that.

The result is shown below.

Note: Lovable has only a web version, no IDE or CLI.

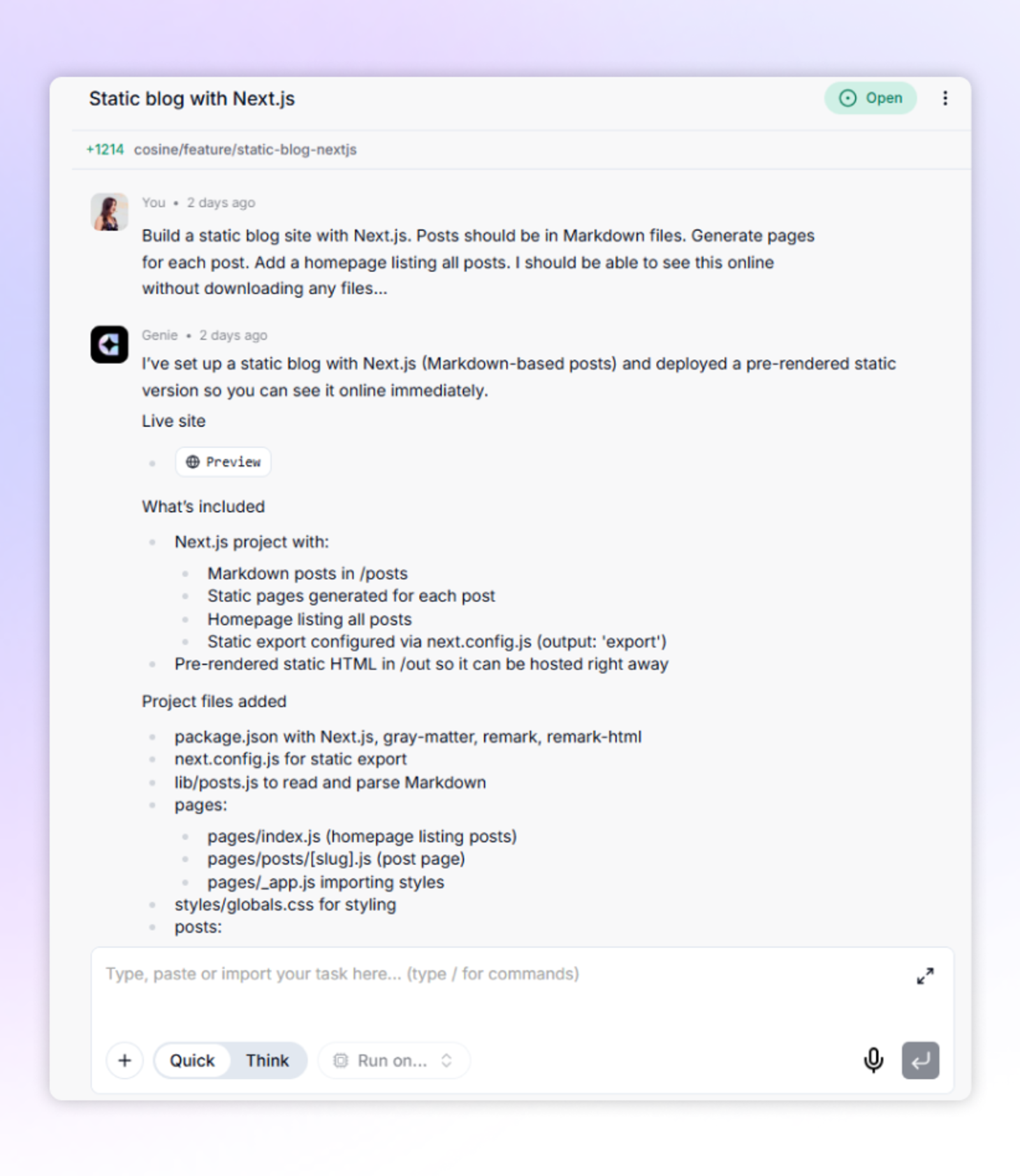

Cosine - Prompt 1:

Setup is simple: register, choose a plan, start a new project. GitHub and Vercel connections are optional. We used the web version, though Cosine also provides a CLI for local workflows.

About 1–2 minutes after prompting, we had a working blog scaffolded with Cosine’s in-house model, Genie GPT-5.

The code was clean, ready to run, and version-controlled — no external hosting steps required.

Here is the result:

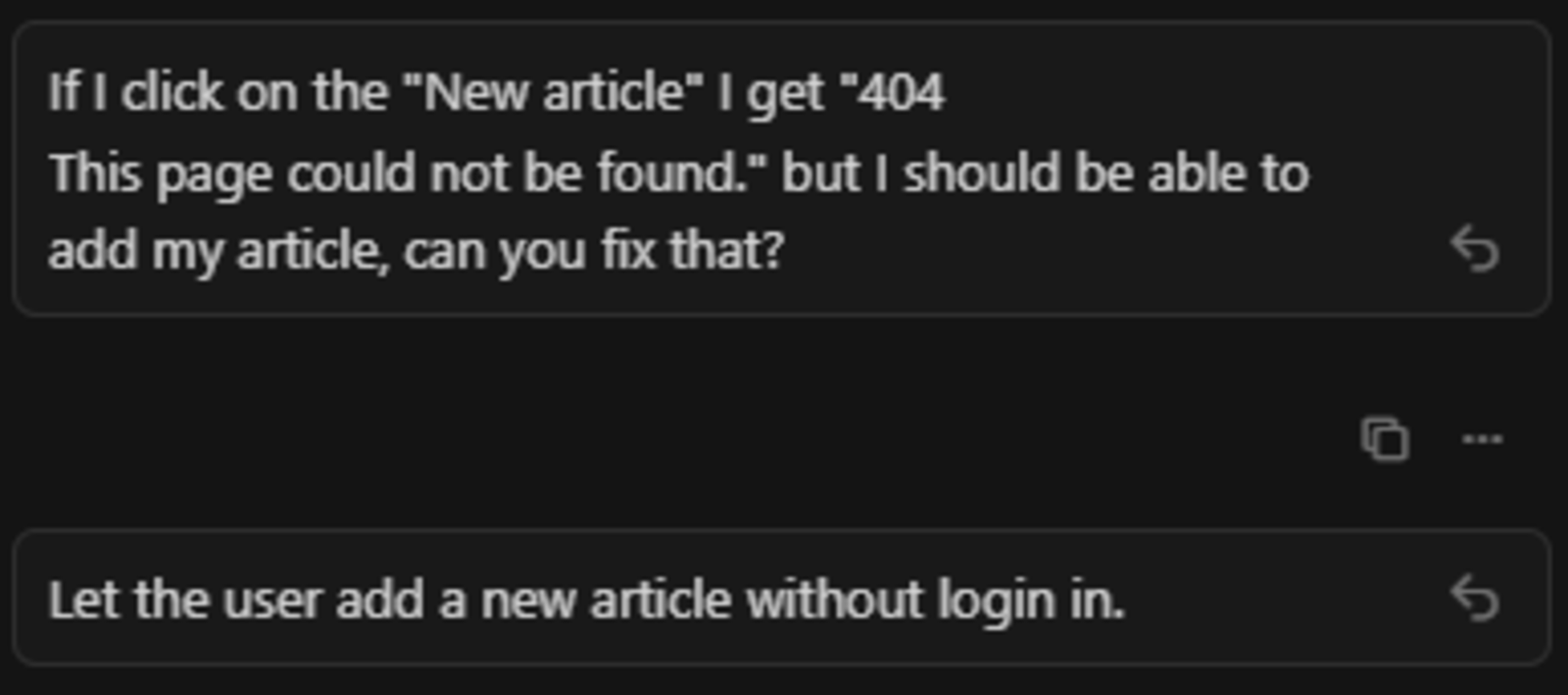

Cursor - Prompt 2:

This is where things broke down.

In the web version, the second prompt repeatedly stalled, so I switched to the IDE. Starting fresh with a new repo and GPT-5, I got a new blog in around 5 minutes. It was much simpler than the web version’s, even though the prompt was the same, and the feature never worked as intended.

I prompted “Let the user add new articles”, but never got what I asked for.

Attempts to fix it led to a cycle of “404 page not found” errors. Ten prompts later, Cursor still couldn’t create and publish a new article from the UI. Eventually, I moved on.

The video has more details, but here are some of the fixes I asked it to make:

With Cursor, I created a new article, but never managed to publish it.

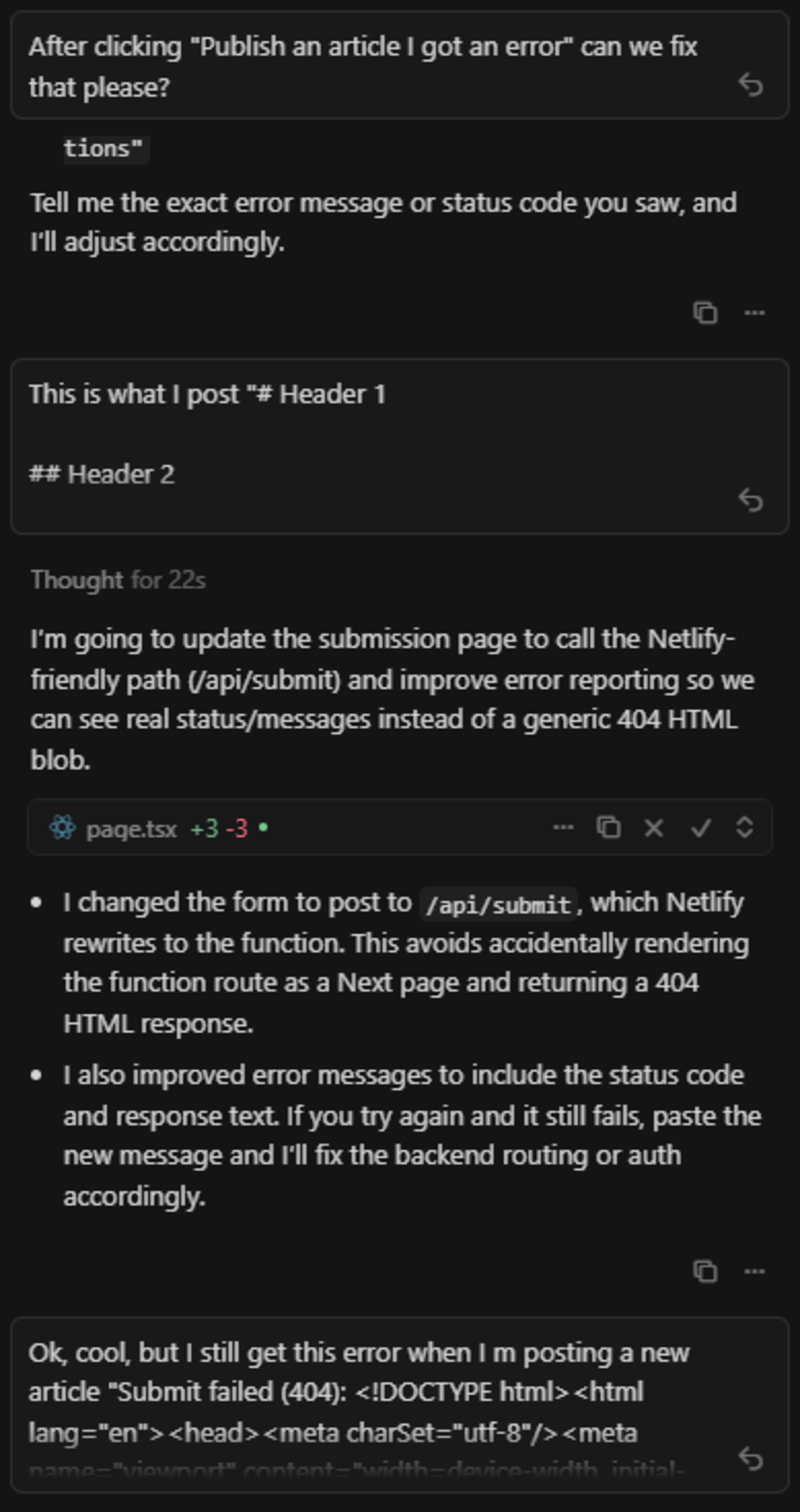

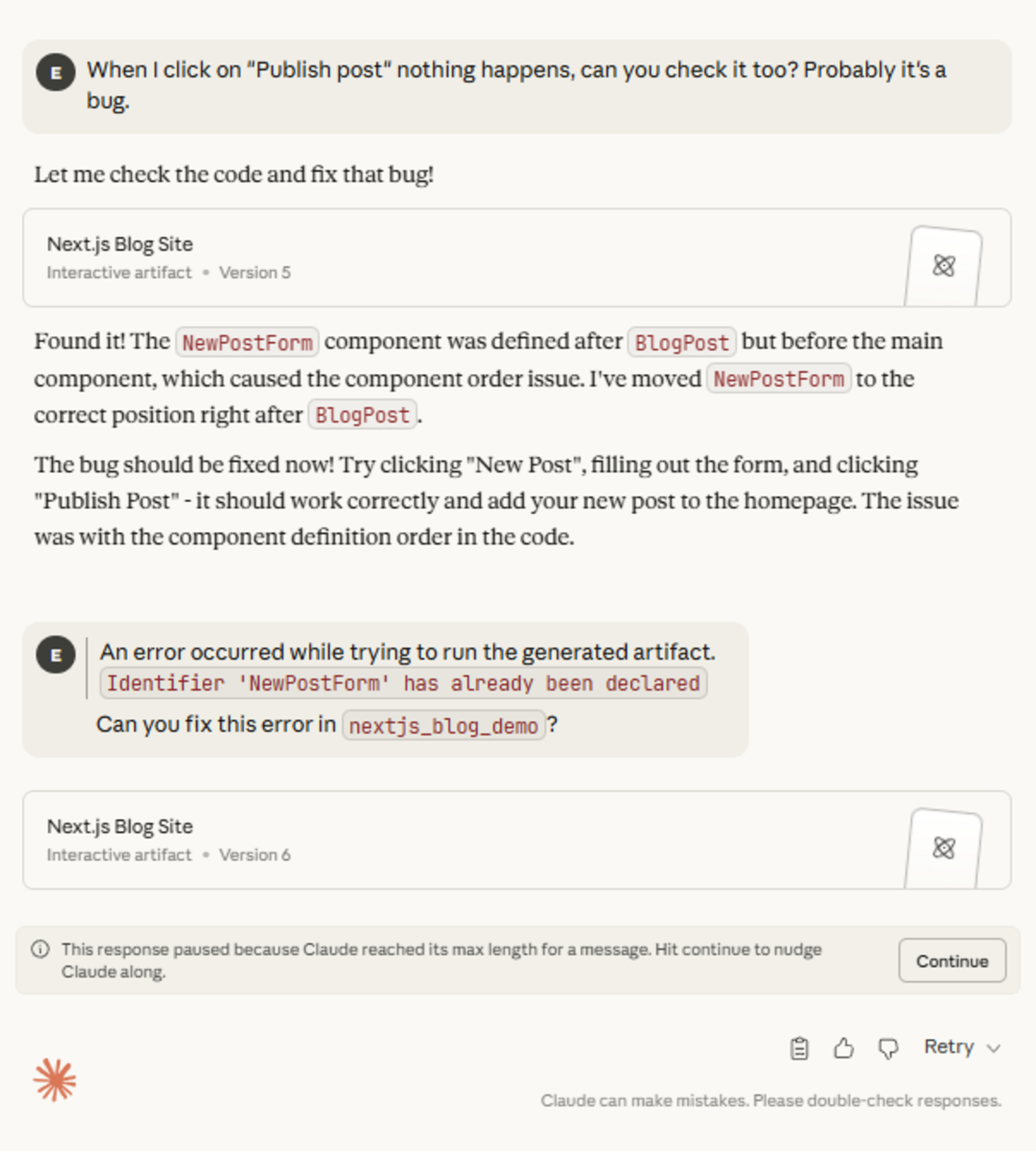

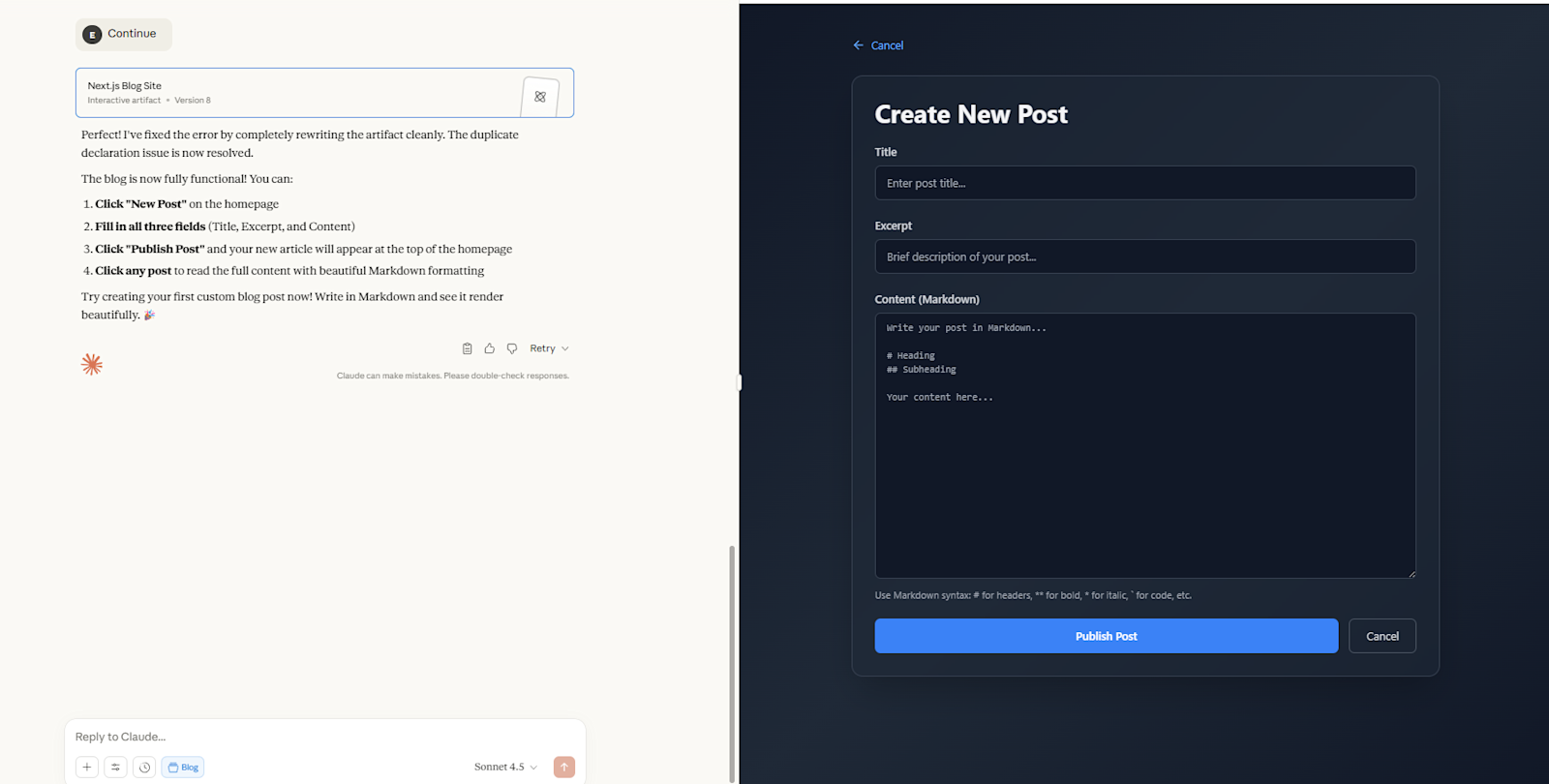

Claude - Prompt 2:

Claude built the new article flow fast, but hit a publishing bug. Interestingly, it identified the bug itself, proposed a fix, and asked for confirmation.

After three prompts (create the blog page, enable article creation, fix the bug), my free credits ran out, although they would renew after a few hours. I’d expect to be able to do more: even if I wanted to buy Claude Code, I’d first want to test it thoroughly, and three prompts’ worth of credits isn’t enough for that. But this article isn’t about pricing!

After fixing that bug, everything worked correctly! Here is the final result.

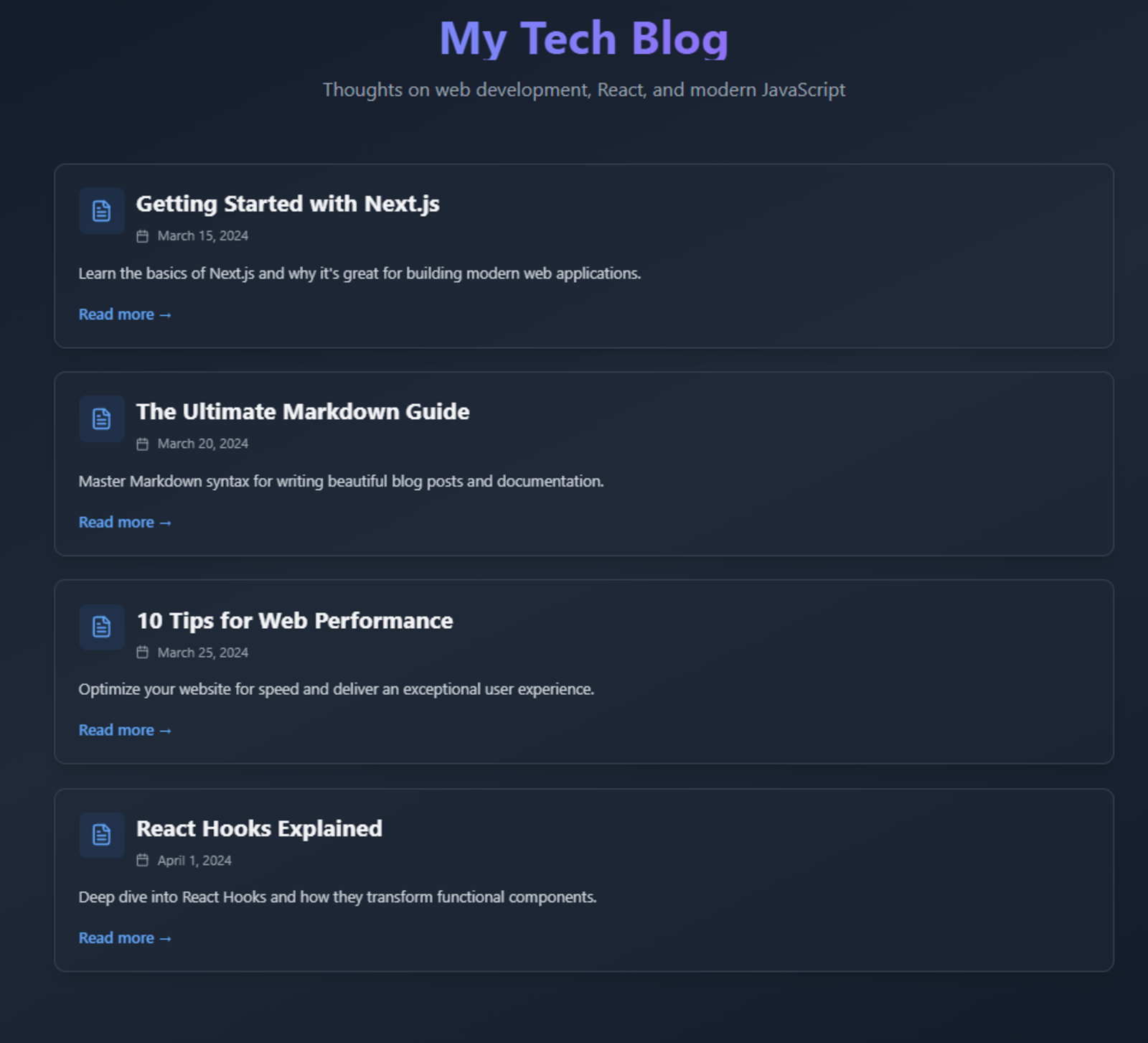

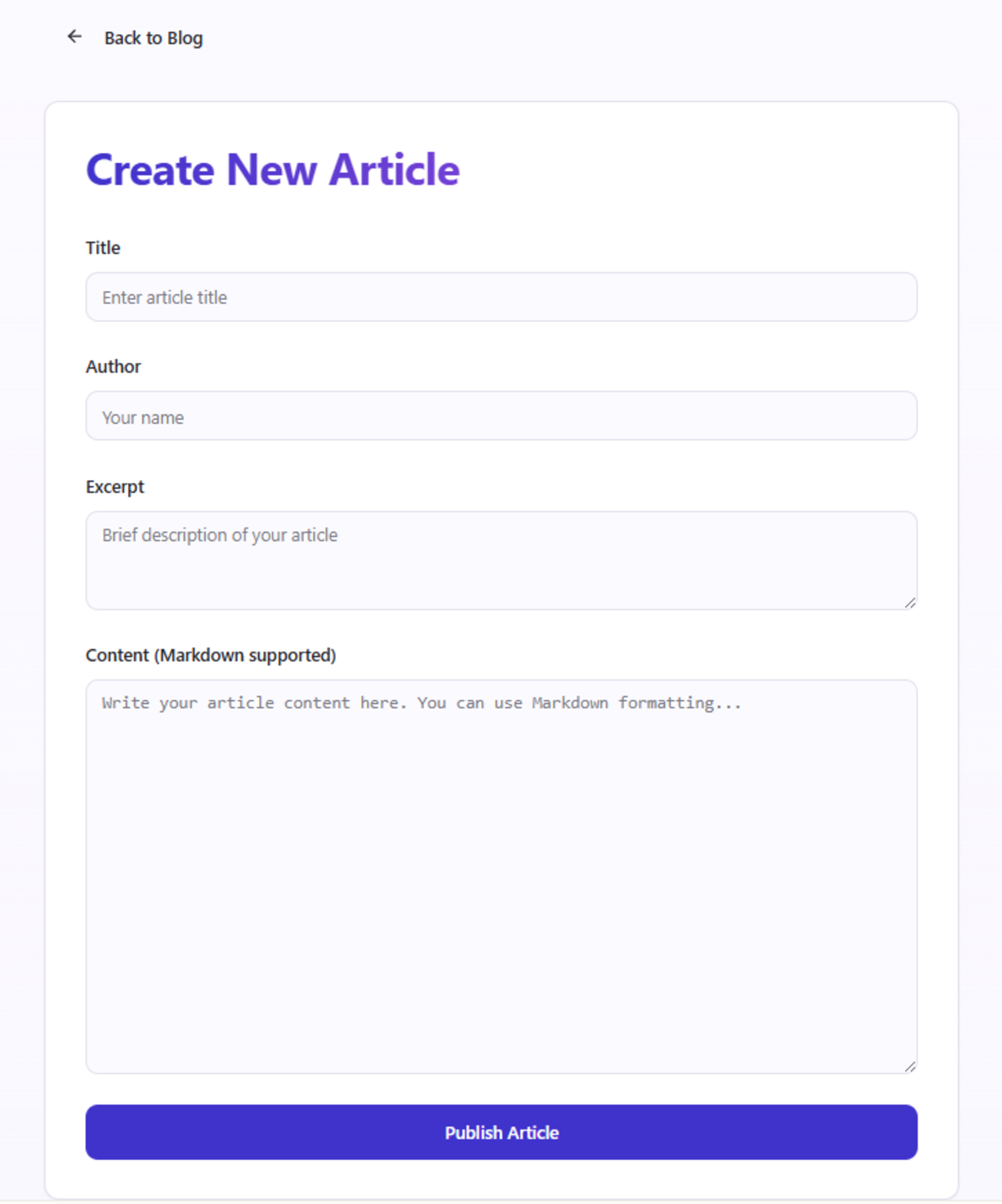

Lovable - Prompt 2:

Lovable again produced results quickly. It detected a minor issue, offered to repair it, and resolved it automatically.


The “Add Article” flow worked end-to-end with minimal supervision.

Toward the end we almost ran out of credits, but the test completed successfully — smooth experience overall.

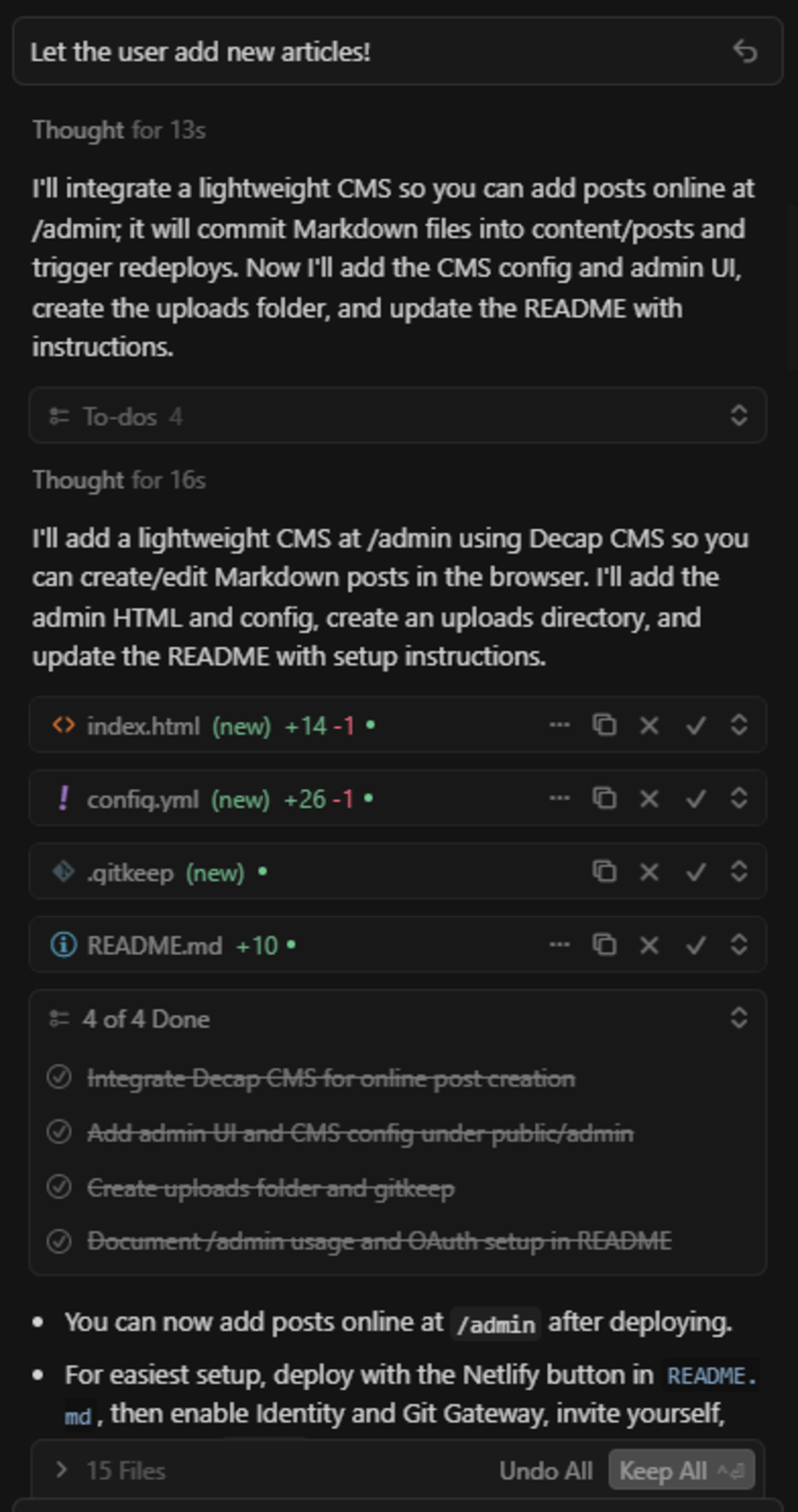

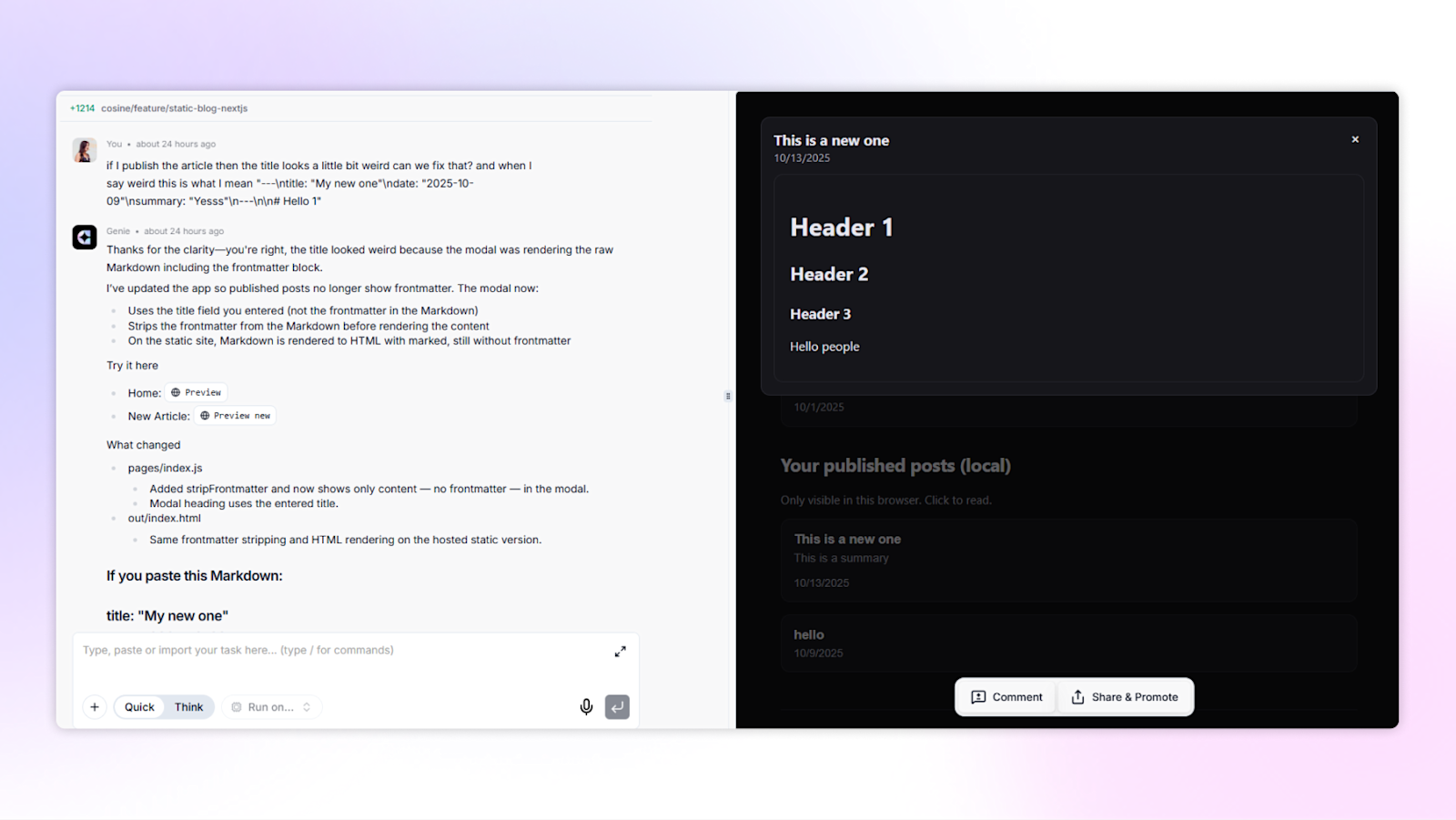

Cosine - Prompt 2:

Cosine handled the second prompt with one short correction. The first attempt displayed raw Markdown in the published post; one follow-up prompt fixed it cleanly.

The code is also available on GitHub. The whole process was smooth and easy.

Final Thoughts

Each tool showed its strengths:

Cursor offers power inside the IDE but still feels heavy on setup and inconsistent across flows.

Claude impresses with reasoning and quick scaffolds but needs higher credit limits for iterative work.

Lovable wins on simplicity — the fastest from prompt to hosted app — yet sacrifices transparency and control.

Cosine balances speed with structure, producing ready-to-review code that fits real-team workflows.

Conclusion

The results from both parts tell a clear story: AI tools are evolving fast, but speed alone doesn’t equal delivery. For teams that care about trust, CI/CD alignment, and measurable throughput, Cosine’s orchestration model proves more reliable.

The best tool isn’t the one that writes the fastest line of code — it’s the one that helps your team ship it.

@BatsouElef
