The AI-coding race has moved beyond chatbots. Developers now expect AI to live where they actually work — in their IDEs. Tools like Cosine, Codex, and Windsurf all promise to boost productivity from inside (or alongside) VS Code.

The question we’re exploring is simple: Which one actually improves the developer experience in practice?

This article looks at how these three platforms perform when developers use them to build real projects. Part 2 walks through two use cases: building something from scratch and adding a feature to an open source project.

Part 1:

1. At a glance - In VS Code

Codex – OpenAI’s coding assistant, built into the paid ChatGPT ecosystem. You need a paid plan such as ChatGPT Plus or Team. Codex uses a specialized model variant, currently called GPT-5-Codex, which is optimized for coding tasks. It integrates into VS Code via the ChatGPT extension, enabling code generation and refactoring from within your editor.

Windsurf – An AI-powered IDE originally built by the Codeium team, designed for real-time debugging and self-healing builds. Windsurf isn’t locked to a single model back-end: it offers both proprietary models (the SWE-1 family) and major third-party models (GPT-5, Claude, etc.).

Cosine – A VS Code extension that connects directly to the Cosine CLI, giving teams policy-aware AI inside their boundary. It adds a “✦ Cosine Changes” panel, streams diffs live, and can apply, explain, and validate code changes without leaving the editor. Cosine runs its own Genie GPT models, tuned specifically for engineering workflows, with variants optimized for test generation, refactoring, and security remediation, and operates entirely inside the user’s environment.

2. Setup and onboarding

Codex requires authentication with OpenAI and GitHub. You create or open a folder before prompting, and it will build your project there. The first run feels similar to working in ChatGPT, but with your files accessible to the model.

Windsurf installs as a standalone IDE or as a VS Code extension, with no accounts or external linking needed. It launches immediately, runs local builds, and even asks whether it should handle server startup for you.

Cosine’s VS Code extension auto-detects the CLI (you need to download Cosine’s CLI before you start using the extension). It shares lightweight editor context (file + selection), launches a Cosine terminal, and streams AI-generated diffs into a dedicated tree view. There is no cloud setup and no external API key: everything executes inside your environment.

3. Developer experience

Codex works best as a conversational assistant: “Generate,” “fix,” “refactor.” It can write complete apps, but guidance and local-run instructions are often missing. For example, you’ll need to ask for deployment steps manually.

Windsurf stands out for hands-on interactivity. When a local build fails, it auto-suggests sending console errors back to the AI, fixes them, and re-runs automatically. It’s the most “agentic” experience of the three — great for individuals who want instant feedback loops.

Cosine, by contrast, emphasizes clarity and auditability. It previews proposed edits as diffs before applying them, provides explanations inline, and logs every AI-touched file. For teams, that matters more than speed: you can trace, approve, or revert with a click.

4. Collaboration and trust

Cosine’s agent model ties into repositories and CI pipelines, so every change is review-ready with rationale and test evidence. For regulated or enterprise work, Cosine’s “inside-your-boundary” architecture avoids cloud data exposure. For personal or experimental coding, Windsurf feels lighter and more spontaneous.

5. Early takeaways

| Tool | Strength | Limitation | Best for |
| --- | --- | --- | --- |
| Cosine | VS Code integration with live diffs, repo context, and policy-aware edits | Requires CLI setup | Teams prioritizing traceability, review quality, and CI alignment |
| Codex | Solid code generation integrated into ChatGPT Pro | Paid plan required, minimal run/test guidance | Individuals already using ChatGPT Pro for rapid prototyping |
| Windsurf | Fastest “run-and-fix” loop, self-healing local builds | Limited CI features | Developers wanting instant feedback and quick debugging |

The first impressions tell a clear story: Codex writes, Windsurf runs, Cosine delivers structure.

In Part 2, we’ll put all three through two practical scenarios: building a new to-do app with CSV export and extending an existing open-source project. Let’s see how they perform under real developer workflows.

Part 2 Hands-On: Building and extending projects with Codex, Windsurf, and Cosine

In this section, we put the three tools to work under real conditions. Two short scenarios were used to evaluate setup, reliability, and iteration speed:

Build a new to-do app with a CSV export option.

Extend an existing open-source “Music Analyzer” project with a visual overlay feature.

All experiments were run in fresh environments, using default settings.

Scenario 1 - Building from scratch: to-do app + CSV export

Codex

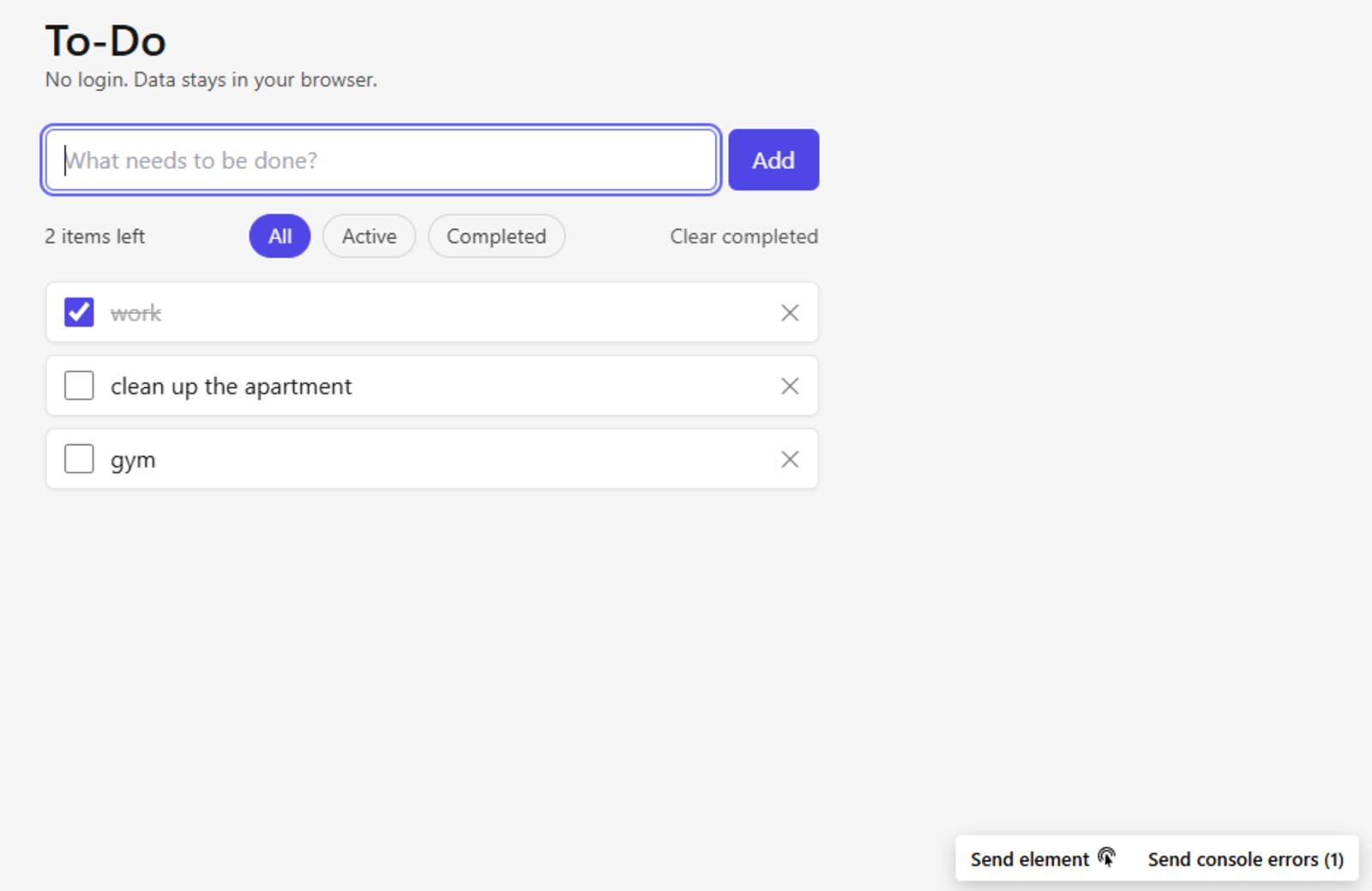

Setup required an active ChatGPT Plus / Team subscription and connection to GitHub. The extension must run inside VS Code with a folder/repo. Prompting “Create me a to-do app with React. No login is needed. I want to run it on Vercel or localhost.” produced a clean scaffold, though Codex didn’t explain local or Vercel deployment until explicitly asked.

Feature prompts behaved consistently:

Add filters → completed automatically. I had planned to ask for “Active”, “Completed” and “All” task tabs, but Codex had already added them in response to the first prompt.

Break something → I intentionally broke a few things in ‘app.jsx’ and asked it to check the code again and fix the bugs. It quickly detected and repaired the errors.

Refactor → I also asked it to refactor the whole codebase and explain why it made each change. It made only minor rewrites and offered little rationale. My guess is that, since it had written all the code itself, there was not much left to refactor.

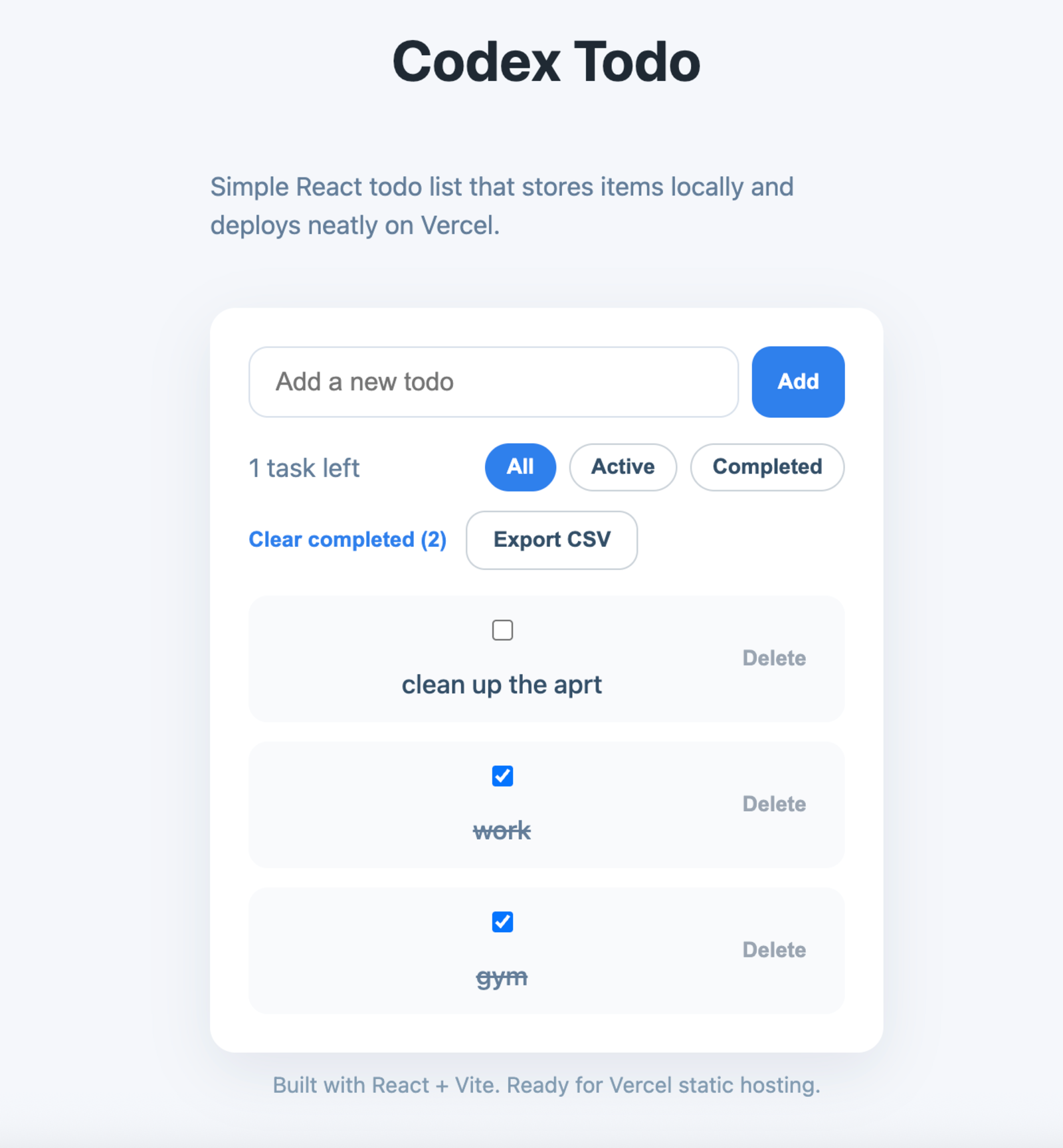

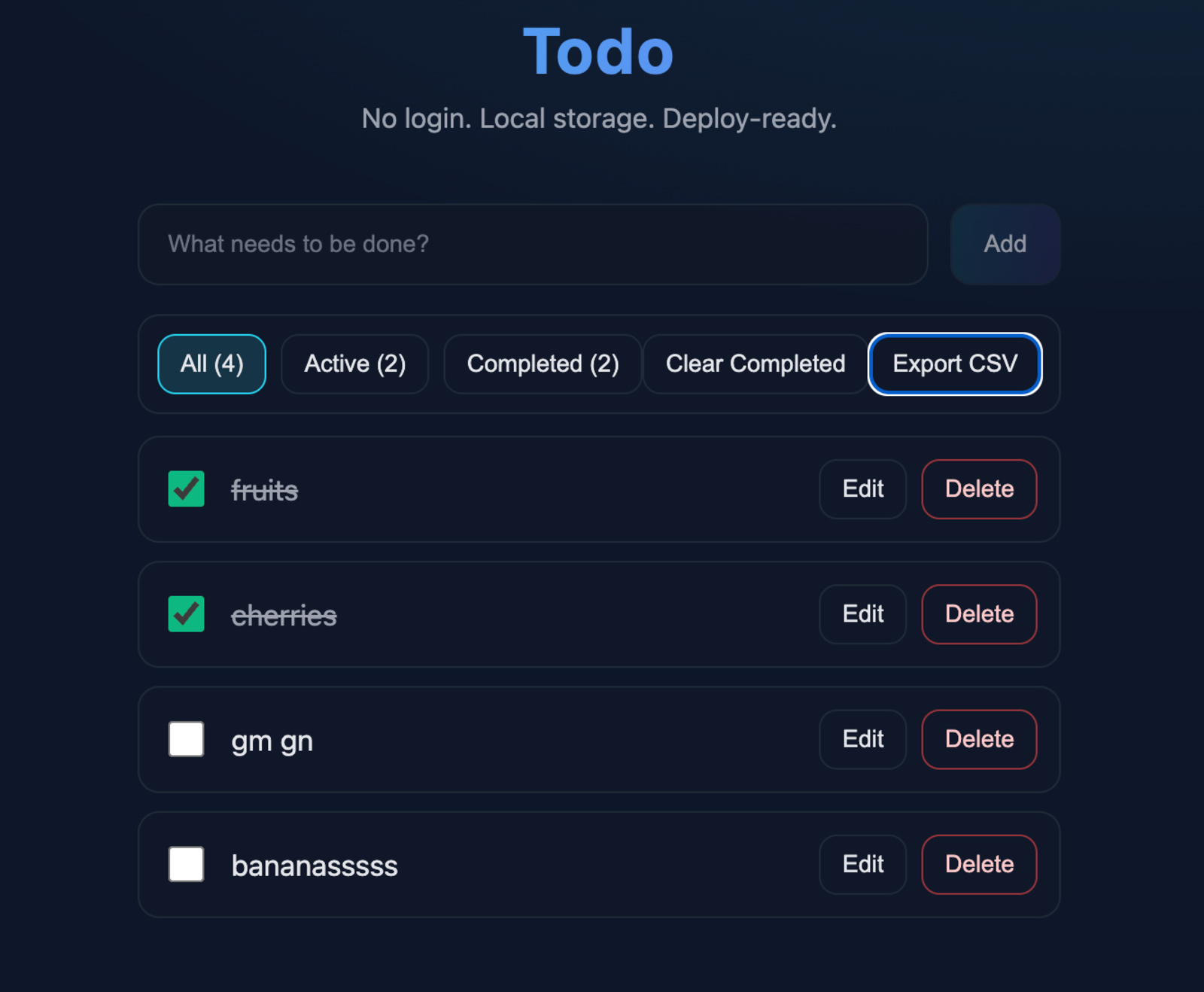

CSV Export → I prompted it with “Add a CSV export option. Users should be able to download their todos in a CSV file.” and it delivered correct functionality after a short delay. You can see the result in the image below.
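To make the CSV export request concrete, here is a minimal TypeScript sketch of the serialization step behind such a feature. It is not the code any of the three tools generated: the `Todo` shape and the function names `escapeCsvField` and `todosToCsv` are assumptions made for illustration, and the quoting rules follow the common RFC 4180 convention.

```typescript
// Hypothetical to-do shape; the generated apps may store different fields.
interface Todo {
  id: number;
  title: string;
  completed: boolean;
}

// Quote a field only when it contains a comma, a double quote, or a line break.
// Embedded double quotes are doubled, as in RFC 4180.
function escapeCsvField(value: string): string {
  if (/[",\r\n]/.test(value)) {
    return `"${value.replace(/"/g, '""')}"`;
  }
  return value;
}

// Build the CSV text: one header row, then one row per to-do.
function todosToCsv(todos: Todo[]): string {
  const header = ["id", "title", "completed"].join(",");
  const rows = todos.map((todo) =>
    [String(todo.id), todo.title, String(todo.completed)]
      .map(escapeCsvField)
      .join(","),
  );
  return [header, ...rows].join("\r\n");
}
```

In a browser app, the returned string would typically be wrapped in a `Blob` and offered as a download through a temporary link. Keeping the serialization in a pure function like this makes the escaping easy to check on its own, for example with a title that contains commas or quotes.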

Result: strong code quality, minimal guidance, good for experienced developers comfortable debugging on their own. The UI output wasn’t my favorite, but that is not the focus of this experiment. As mentioned in the introduction to Part 2, I used the default settings, which in this case meant the gpt-5-codex medium model.

Repo: todo-Codex on GitHub

Windsurf

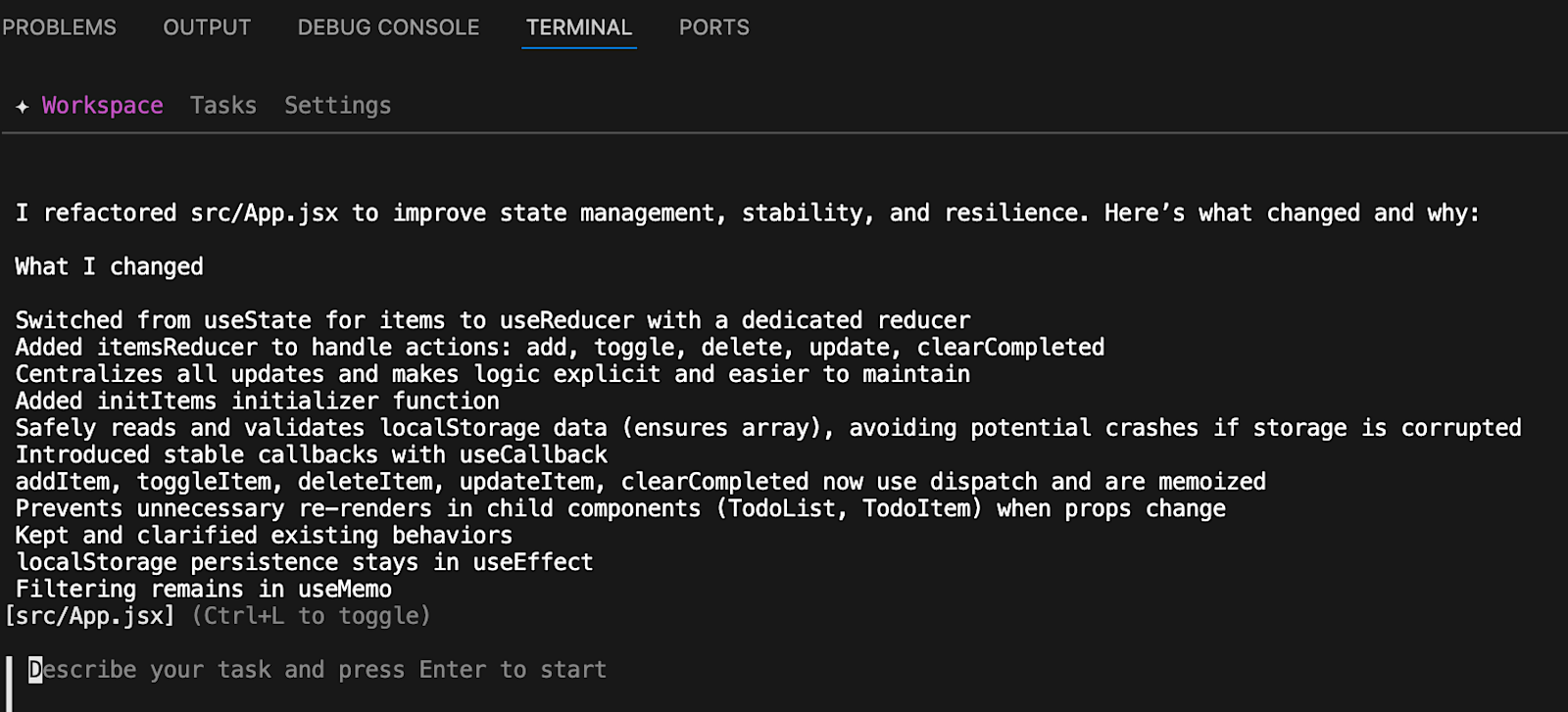

Installation was friction-free. You don’t have to connect to GitHub or start from a local project. After the first prompt, Windsurf immediately suggested how to run the app locally or deploy via Vercel / Netlify, something Codex skipped.

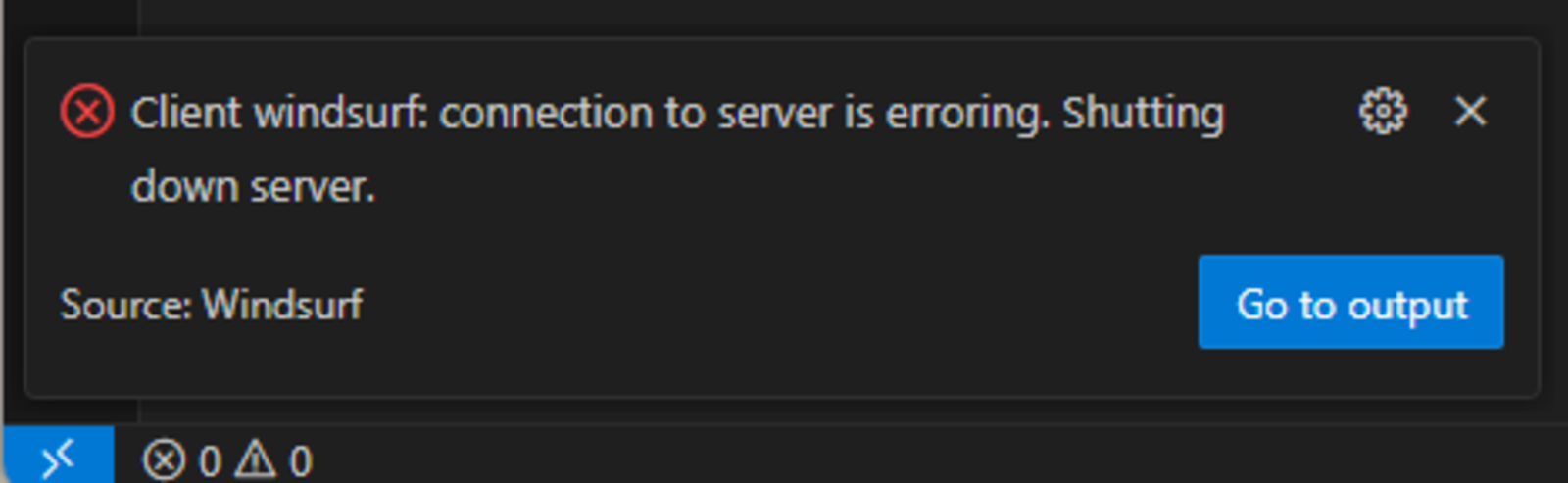

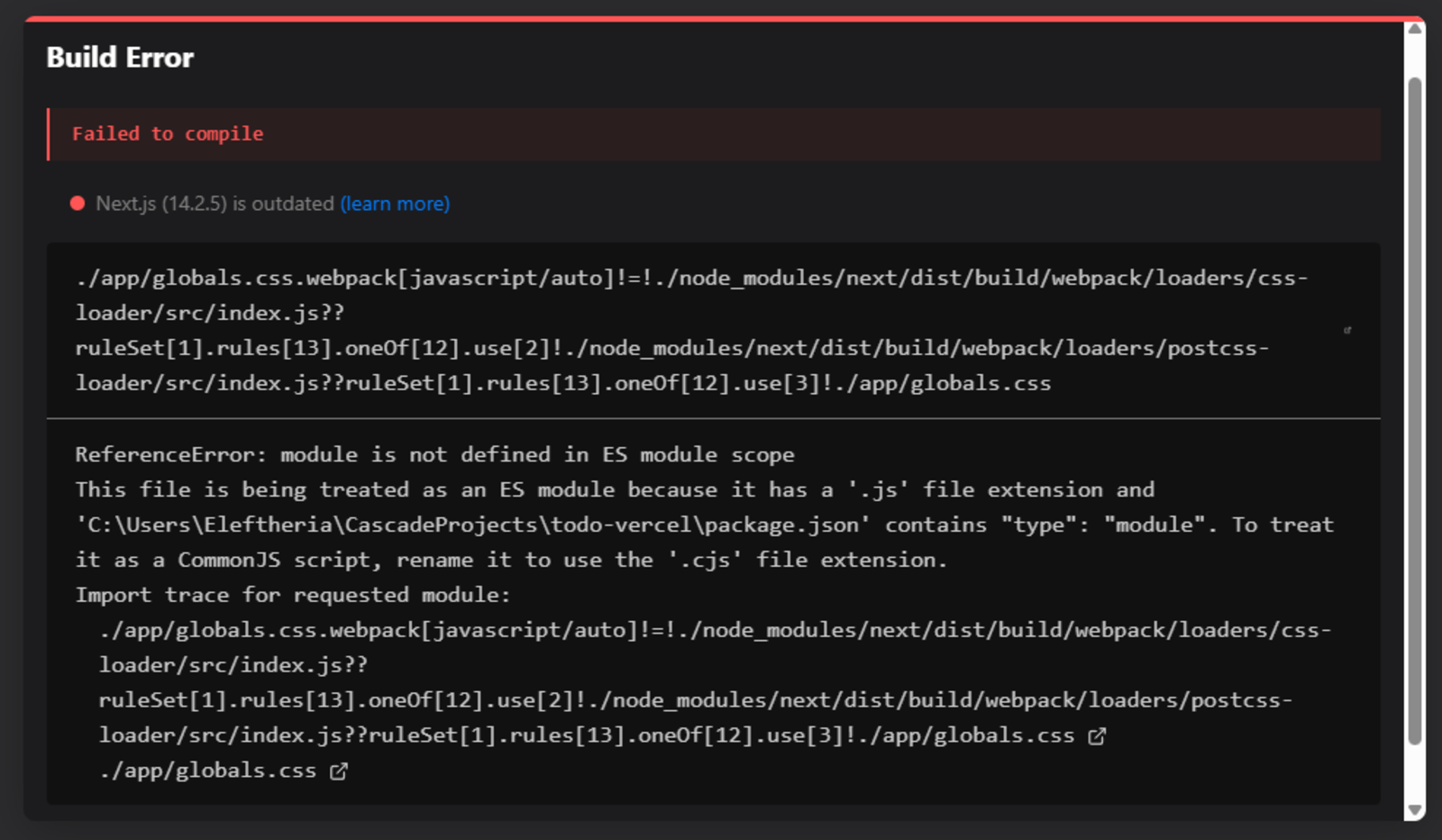

After the first prompt, the build initially failed (outdated Next.js, ES-module errors), but Windsurf automatically offered to send the console logs back to the IDE for repair.

One click later, the issues were fixed and the app ran.
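The fail, report, fix, re-run cycle described above can be pictured as a small loop. The TypeScript sketch below is purely illustrative and is not Windsurf’s implementation: `BuildResult`, `runBuild`, `requestFix`, and `runAndFix` are hypothetical names, and the loop is synchronous only to keep the example short.

```typescript
// Hypothetical result of one build attempt.
interface BuildResult {
  ok: boolean;
  errors: string[]; // console output captured from a failed build
}

// Hypothetical hooks: one runs the local build, the other hands the
// captured errors to the assistant so it can patch the code.
type RunBuild = () => BuildResult;
type RequestFix = (errors: string[]) => void;

// Build, and on failure send the errors for repair and try again,
// until the build passes or the attempt budget is spent.
function runAndFix(
  runBuild: RunBuild,
  requestFix: RequestFix,
  maxAttempts = 3,
): { ok: boolean; attempts: number } {
  for (let attempt = 1; attempt <= maxAttempts; attempt++) {
    const result = runBuild();
    if (result.ok) {
      return { ok: true, attempts: attempt };
    }
    // Only ask for a fix when there is still a rebuild left to verify it.
    if (attempt < maxAttempts) {
      requestFix(result.errors);
    }
  }
  return { ok: false, attempts: maxAttempts };
}
```

The attempt cap is a design choice of this sketch: without it, a fix that never converges would keep the loop running indefinitely.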

Subsequent tests showed:

Add filters → Similarly to Codex, Windsurf completed this step automatically. “Active”, “Completed” and “All” tasks tabs were there from the first prompt.

Break something → When I deliberately deleted some code and asked Windsurf to fix any bugs, it took noticeably longer than it had when adding features, but it found all of them and suggested ways to test the app locally. After testing, everything worked correctly.

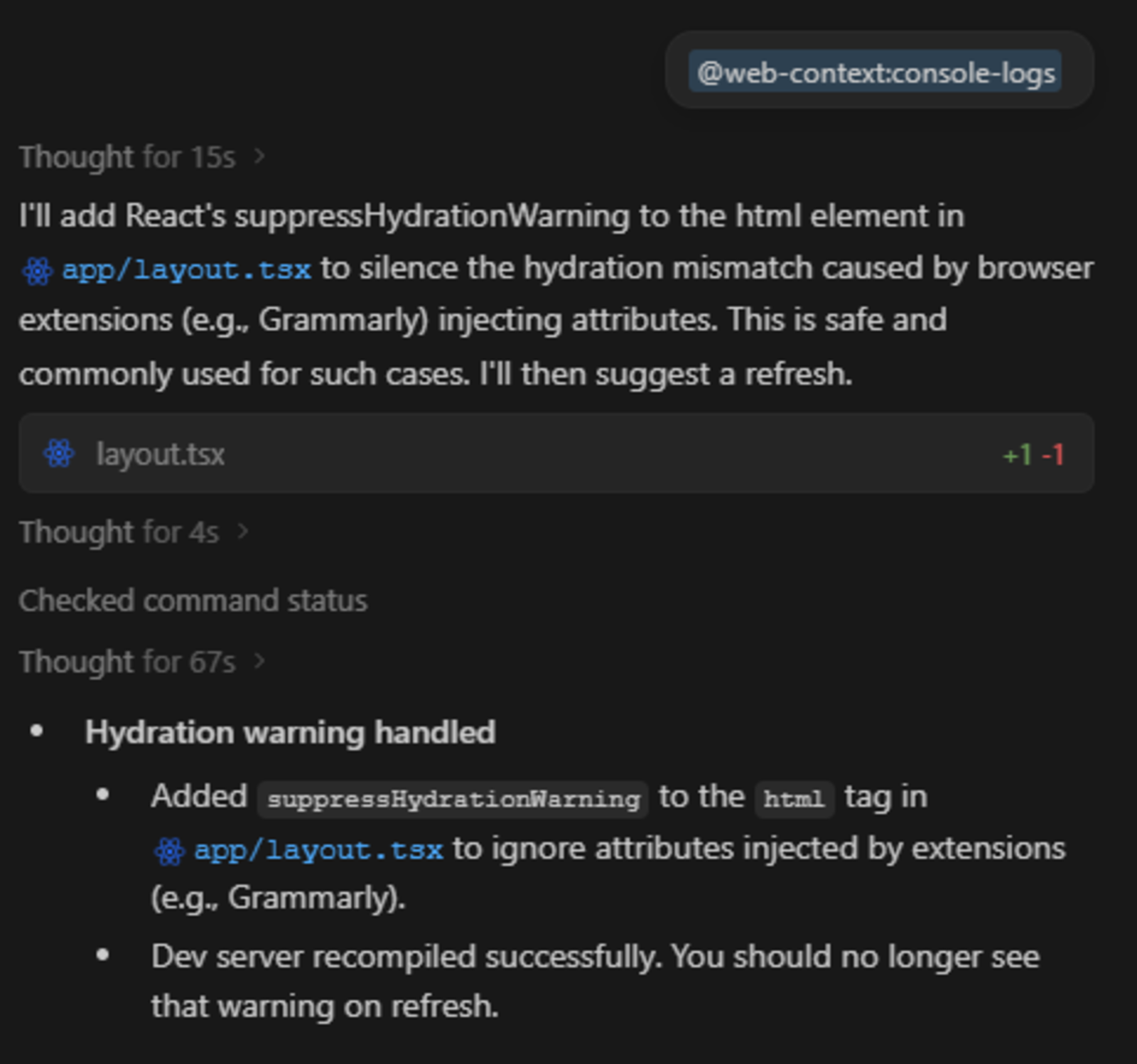

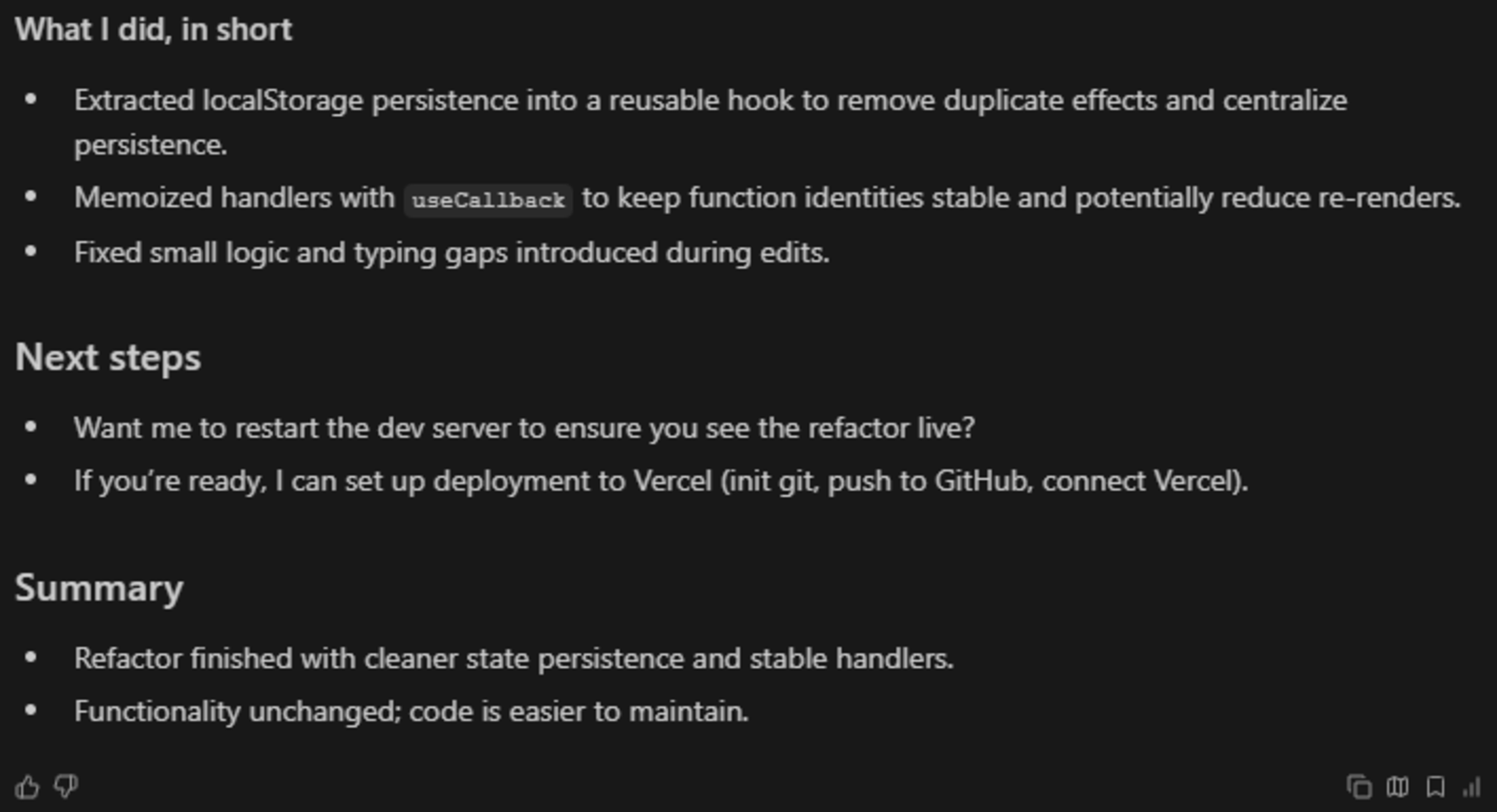

Refactor → Next, I asked for a refactor along with an explanation of the changes. After a couple of minutes it proposed a few changes and asked whether I accepted them; I clicked “yes”.

When I tested the result, the app appeared to run fine, but the console showed some errors. I pasted them into the IDE, and after a few seconds it fixed them; this time the app ran smoothly.

CSV Export → I pasted the same prompt as in Codex and the new functionality worked flawlessly. You can see the final result in the image below.

Result: smooth local loop, ideal for individual developers who value an autonomous IDE that handles builds and fixes interactively. One thing I noticed: it responded quickly at first, but the more I prompted it, the longer it needed to “think”. I used the GPT-5 model.

Repo: todo-windsurf on GitHub

Cosine

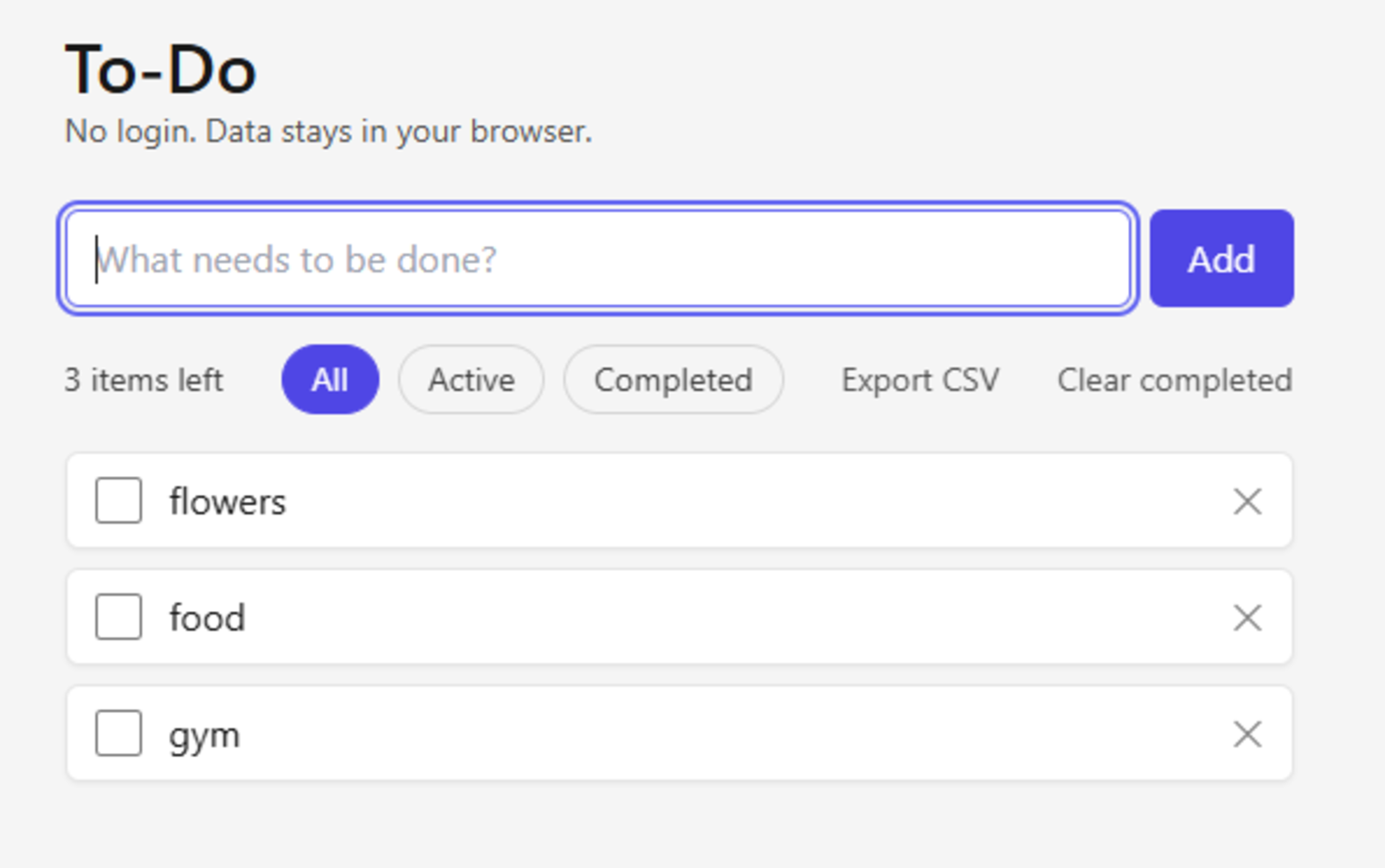

Cosine on VS Code automatically detected the CLI, streamed diffs into the “✦ Cosine Changes” panel, and displayed live previews. More specifically, the prompt “Create me a to-do app with React. No login is needed. I want to run it on Vercel or localhost.” produced a complete CRUD (add, edit, delete, cancel) app and a concise README with run instructions.

Follow-up prompts matched the others:

Add filters → It delivered them with the first prompt. Its app also included edit and cancel functionality, which the Codex and Windsurf versions lacked.

Break something → Although I removed a lot of variables and functions, it found and fixed all the bugs quickly.

Refactor → After asking for a refactor and explanations, it produced readable diffs and explanations inline.

CSV Export → It delivered the feature exactly as I asked, and after testing everything was working correctly. Below, you can see an image of the final result.
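For readers unfamiliar with what the to-do features in this scenario involve, the add, edit, and delete operations and the “All”, “Active”, and “Completed” tabs can be sketched as pure functions over a list. This is a hedged TypeScript illustration, not the output of Cosine or the other tools: the `Todo` shape, the `Filter` type, and every function name here are assumptions.

```typescript
// Hypothetical to-do shape and filter names, chosen for illustration.
interface Todo {
  id: number;
  title: string;
  completed: boolean;
}

type Filter = "all" | "active" | "completed";

// Each operation returns a new array instead of mutating the input,
// which fits how React state updates are usually written.
function addTodo(todos: Todo[], title: string): Todo[] {
  const nextId = todos.reduce((max, t) => Math.max(max, t.id), 0) + 1;
  return [...todos, { id: nextId, title, completed: false }];
}

function editTodo(todos: Todo[], id: number, title: string): Todo[] {
  return todos.map((t) => (t.id === id ? { ...t, title } : t));
}

function toggleTodo(todos: Todo[], id: number): Todo[] {
  return todos.map((t) => (t.id === id ? { ...t, completed: !t.completed } : t));
}

function deleteTodo(todos: Todo[], id: number): Todo[] {
  return todos.filter((t) => t.id !== id);
}

// Select the items shown under each tab.
function filterTodos(todos: Todo[], filter: Filter): Todo[] {
  switch (filter) {
    case "active":
      return todos.filter((t) => !t.completed);
    case "completed":
      return todos.filter((t) => t.completed);
    default:
      return todos;
  }
}
```

In this model, “cancel” needs no function of its own: an edit is simply never committed, because `editTodo` is only called when the user confirms.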

Result: As fast as Windsurf, but with full transparency: every change is reviewable and auditable inside the repo.

Repo: todo-cosine-vs on GitHub

Scenario 2 – Extending an existing project: Music Analyzer Demo

I found an open source project and cloned it. In each platform I asked:

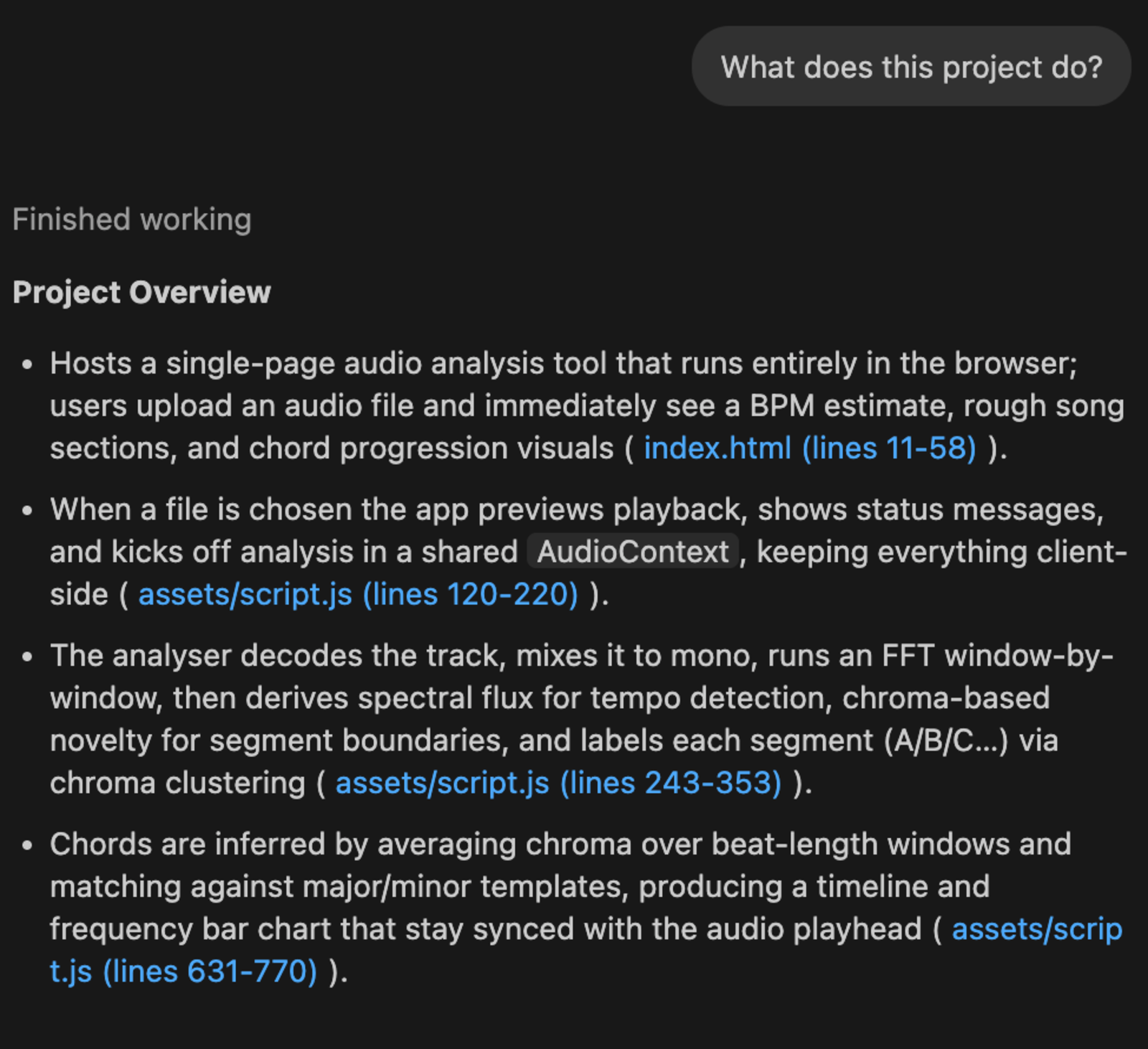

Prompt 1: “What does this project do?”

Prompt 2: “What features shall I add?”

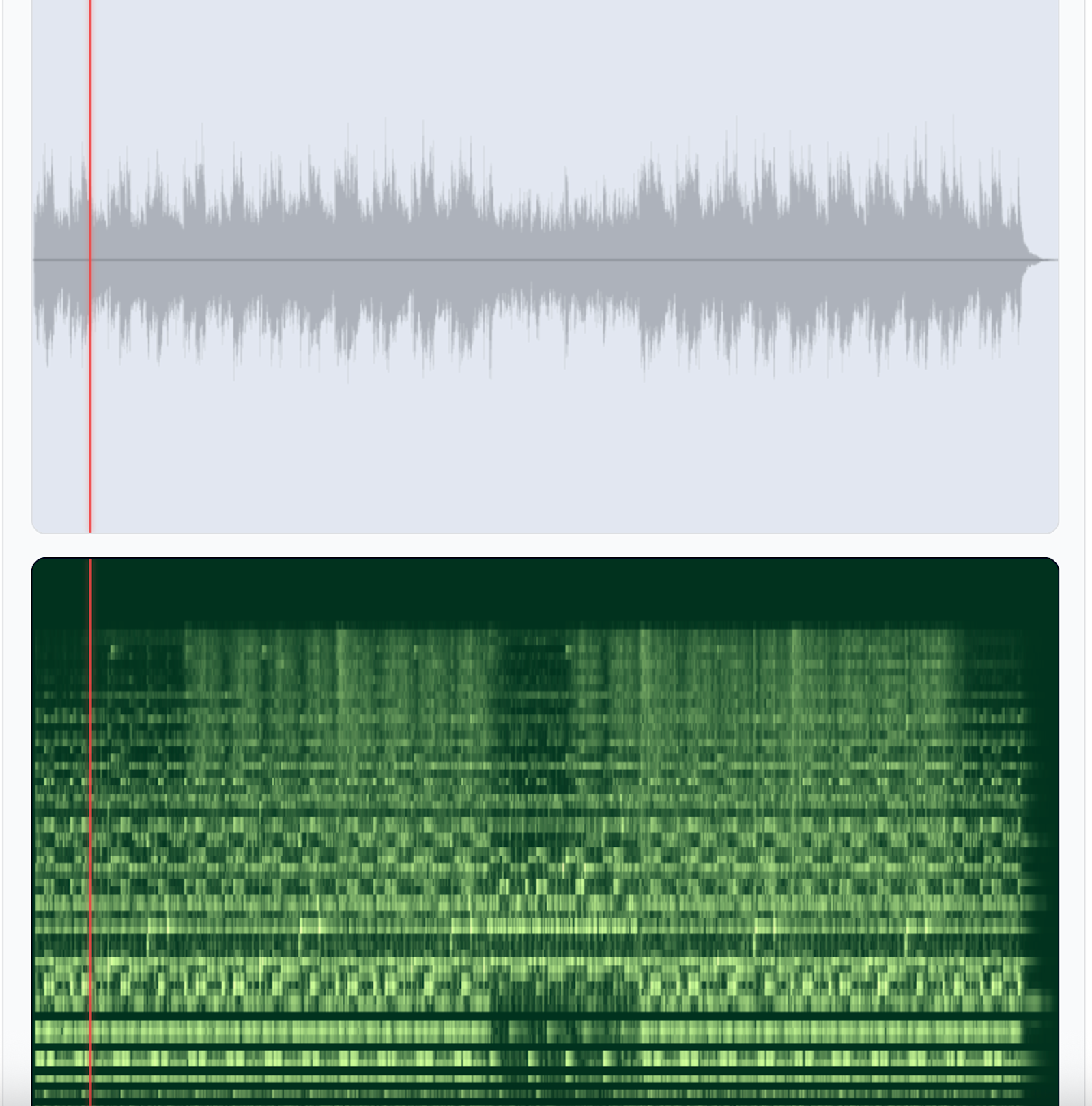

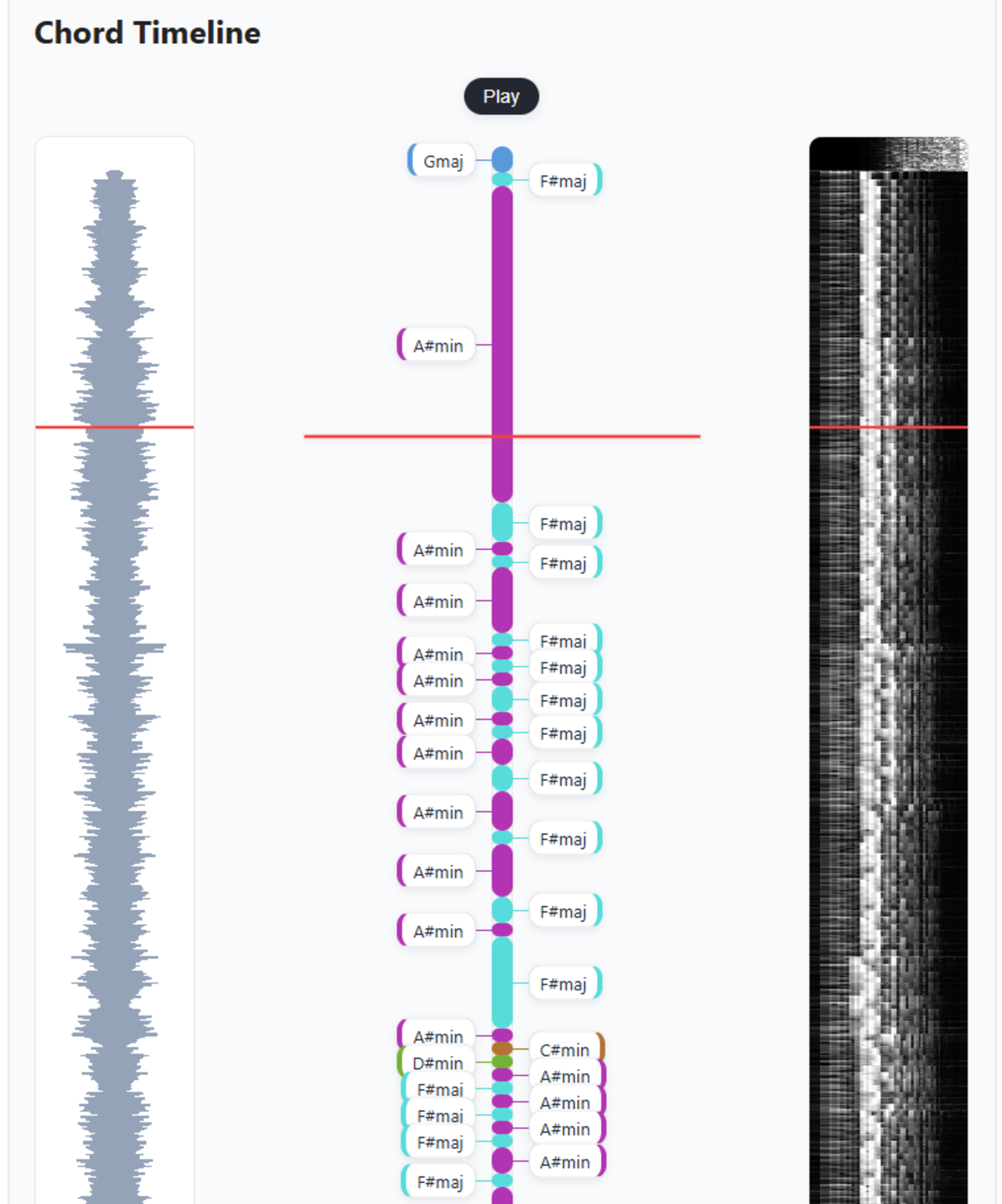

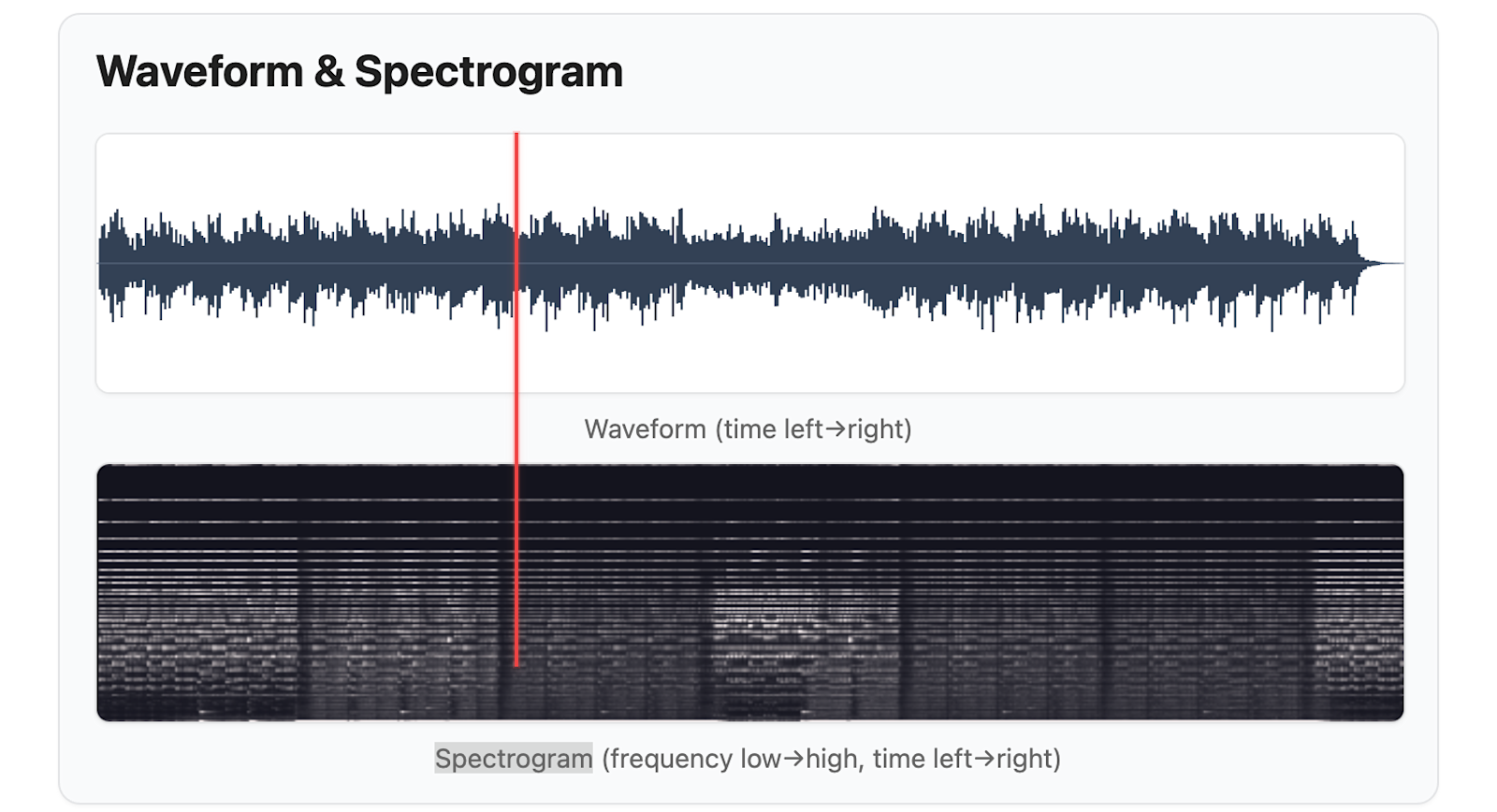

Prompt 3: “Add visual overlays like waveform or spectrogram alongside the court timeline to help correlate analysis with the audio itself”
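A waveform overlay of this kind usually starts by reducing the raw audio samples to one min/max pair per horizontal pixel. The TypeScript sketch below shows that reduction step under stated assumptions: it is not the code any of the tools produced for the Music Analyzer project, and `Peak` and `computePeaks` are hypothetical names. The input is a `Float32Array`, which is what the Web Audio API’s `AudioBuffer.getChannelData` returns.

```typescript
// One vertical slice of the waveform: lowest and highest sample in the bucket.
interface Peak {
  min: number;
  max: number;
}

// Reduce raw samples to at most `buckets` min/max pairs, one per pixel column.
function computePeaks(samples: Float32Array, buckets: number): Peak[] {
  if (buckets <= 0 || samples.length === 0) {
    return [];
  }
  const bucketSize = Math.ceil(samples.length / buckets);
  const peaks: Peak[] = [];
  for (let start = 0; start < samples.length; start += bucketSize) {
    const end = Math.min(start + bucketSize, samples.length);
    let min = Infinity;
    let max = -Infinity;
    for (let i = start; i < end; i++) {
      const value = samples[i];
      if (value < min) min = value;
      if (value > max) max = value;
    }
    peaks.push({ min, max });
  }
  return peaks;
}
```

Each peak can then be drawn as a vertical line from `min` to `max` on a canvas that shares its horizontal axis with the timeline, which is what lets a viewer line up analysis results with the audio.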

Codex

With the first prompt it produced a brief summary but no run instructions. Even so, I liked the style of its explanation, which cited the relevant files and line numbers.

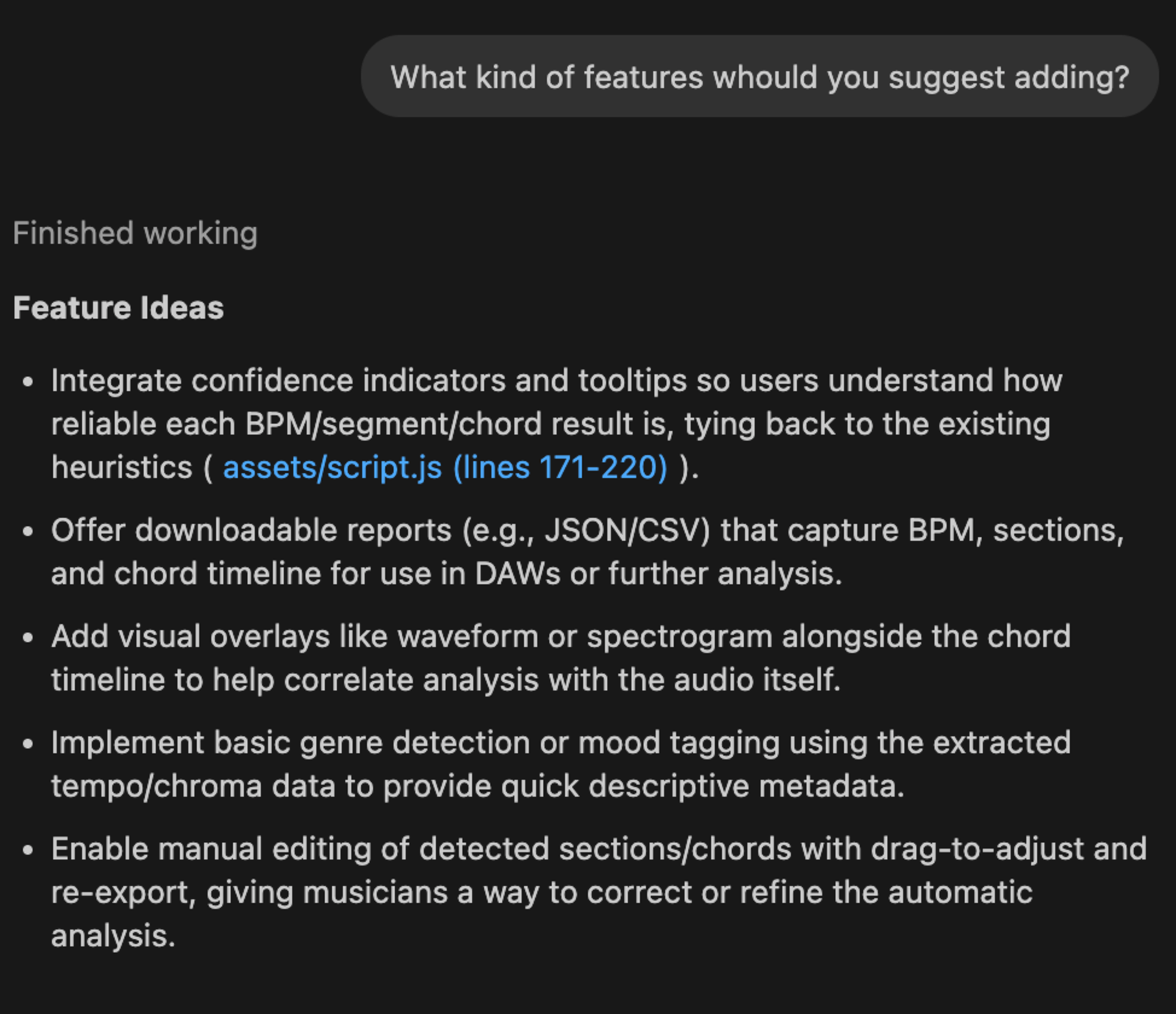

In response to the second prompt it suggested a few features, but far fewer than the other two tools. You can check them in the following image:

Finally, I picked the third suggested feature and prompted “Add visual overlays like waveform or spectrogram…”. It implemented the feature correctly after ~3 minutes. Below is the result of the visual overlay.

Result: Effective but minimal context, suited for users comfortable verifying behavior manually.

Windsurf

Windsurf gave me a clear explanation of the project and told me how to run it before any coding started (Codex didn’t mention that).

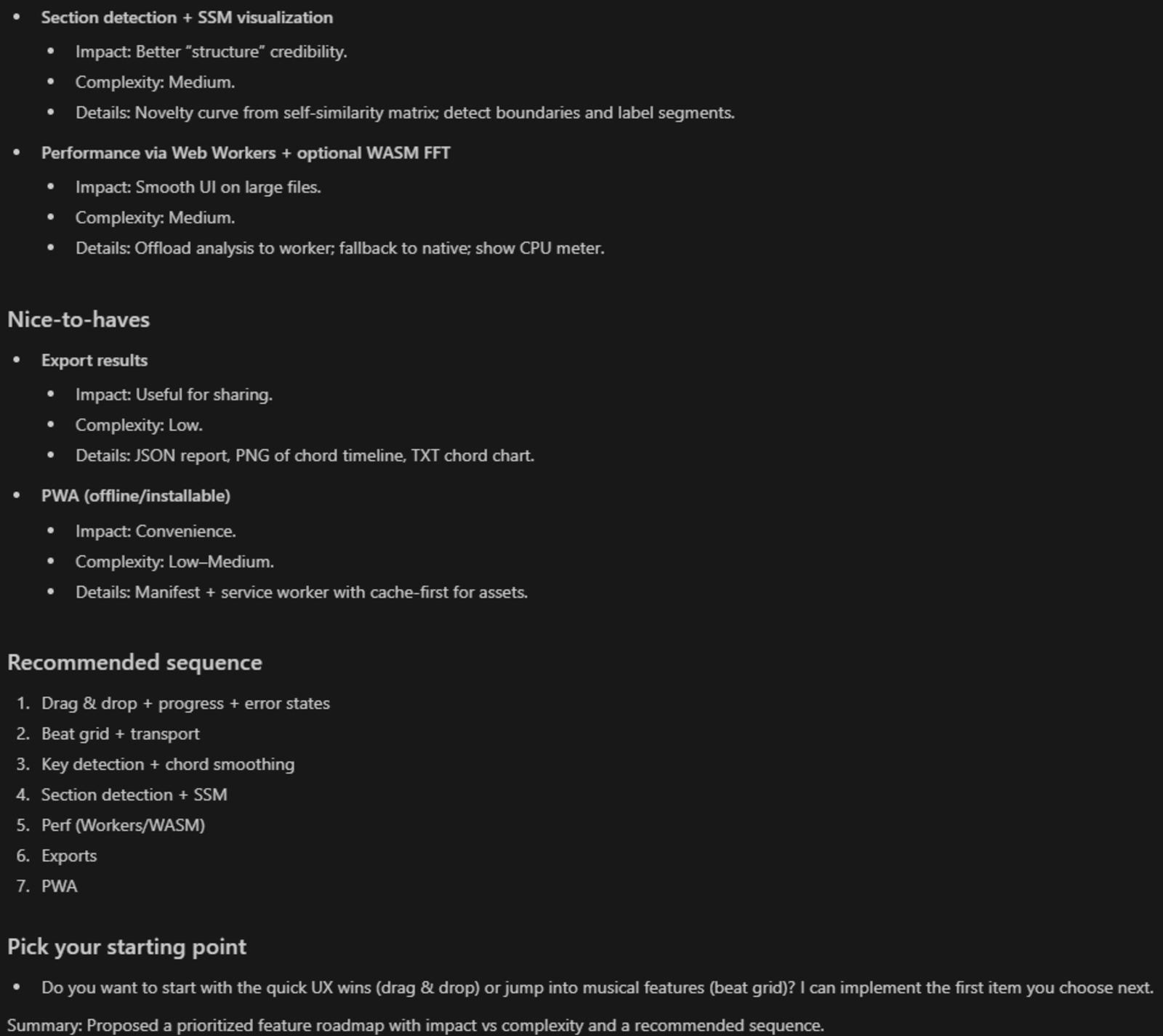

When asked “What features shall I add?” it generated an extensive list and even prioritized recommendations, something that Codex didn’t do.

I prompted it to add the same overlay as in the Codex run. In total it took 1–2 minutes. Windsurf asked some questions along the way, such as where I wanted the overlays to be placed. Below you can see the final result.

Result: Excellent proactive suggestions, more context than Codex, smooth execution. The execution was also smoother in comparison to the 1st scenario.

Cosine

With the first prompt Cosine not only explained the project but also inserted the explanation into script.js for future maintainers (something that the other platforms didn’t do).

With the second prompt, its feature suggestions were rich and automatically grouped by priority. It suggested the most features of the three tools.

When instructed to implement the same overlay, it did so cleanly, asked if further iteration was needed, and committed the diff through the VS Code extension. You can see the result below:

Result: Highest context awareness, balanced automation with human-in-the-loop review.

Repo: music-analyser-demo on GitHub

Conclusion

Each tool delivered value in its own lane:

Codex: dependable code generator, minimal hand-holding.

Windsurf: fast, with strong self-healing.

Cosine: most structured workflow with diffs, rationale, and auditability baked in.

Across both parts, the pattern is consistent: AI coding tools are converging on speed, but clarity and collaboration still decide who wins in the editor. Codex writes, Windsurf reacts, and Cosine orchestrates, turning AI edits into traceable, review-ready changes.

@BatsouElef
