Deploy Cosine on your own infrastructure, fully air-gapped.

Kubernetes-native deployment. And underpinned by open-source models

post-trained for maximum accuracy.

Kubernetes-native deployment on your own infrastructure.

Underpinned by open-source models post-trained for maximum accuracy.

Underpinned by open-source models post-trained for maximum accuracy.

Post-training

How we deliver state-of-the-art accuracy, even on-premise

For on-premise deployments, Cosine applies reinforcement learning to

open-source LLMs using a dataset of real-world software engineering

workflows.

By capturing reasoning traces from public code repositories, we train models to think like human developers. This delivers significantly higher coding accuracy per GPU, whatever the base model. For example, in 2025 we post-trained OpenAI's open-source gpt-oss-120B model for a global investment bank and delivered a 20% uplift in performance.

We can also post-train a model on a specific coding language (e.g. COBOL, Fortran, Verilog) or on your company’s specific coding conventions and repos, to deliver an even higher and more specialised level of accuracy.

By capturing reasoning traces from public code repositories, we train models to think like human developers. This delivers significantly higher coding accuracy per GPU, whatever the base model. For example, in 2025 we post-trained OpenAI's open-source gpt-oss-120B model for a global investment bank and delivered a 20% uplift in performance.

We can also post-train a model on a specific coding language (e.g. COBOL, Fortran, Verilog) or on your company’s specific coding conventions and repos, to deliver an even higher and more specialised level of accuracy.

The steps we take

We help you decide what hardware to deploy

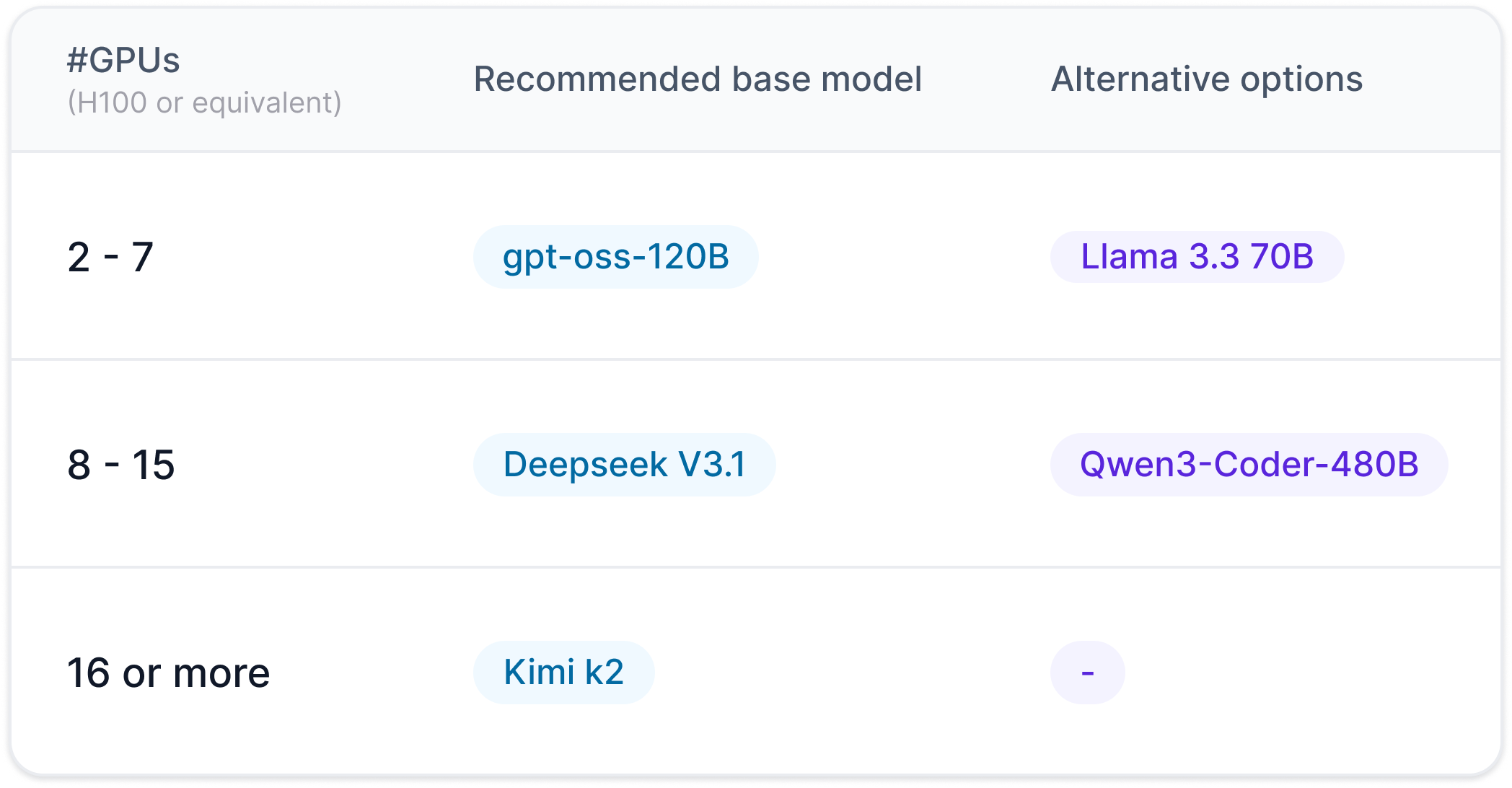

We’ll help you decide how many GPUs to allocate and how to balance them between inference and training workloads. Under-provisioning limits your accuracy and throughput; over-provisioning ties up capital and power.

We help you choose an open-weight model

Instead of making an API call to GPT-5 or Claude, you’ll host an open-weights model such as Llama 3, Mistral, Gemma, DeepSeek, or Phi-3. Each has distinct strengths, licenses, and compute footprints, and selecting one that fits your stack and GPU budget is critical.

We post-train to suit your needs

To reach enterprise-grade precision, many organisations choose to train their chosen model on internal data, domain-specific code, or synthetic analogues. The more specialised and critical the work, the more this matters. This is how you turn a capable base model into a high-fidelity engineering assistant.

Kubernetes-native for speedy onboarding

To make integration simple and fast, we package our services for easy

deployment on Kubernetes. Cosine delivers production-ready Docker images, Helm charts, and configuration

templates to streamline setup and orchestration across your clusters.

Our engineers work with your platform team to validate compatibility with your existing CI/CD pipelines, manage secure image delivery, and optimize runtime performance for both training and inference workloads. This approach allows you to maintain full control over your environment while benefiting from a proven, containerized deployment workflow tailored for enterprise scale.

Our engineers work with your platform team to validate compatibility with your existing CI/CD pipelines, manage secure image delivery, and optimize runtime performance for both training and inference workloads. This approach allows you to maintain full control over your environment while benefiting from a proven, containerized deployment workflow tailored for enterprise scale.

Enterprise-level service to support your deployment

We recognize that on-premise deployment is complex. That’s why we offer

full-service support for customers deploying

our agentic AI systems on-premise.

Each engagement begins with a dedicated deployment team that works closely with your technical and security stakeholders to ensure seamless installation, integration, and configuration within your infrastructure.

We conduct weekly check-ins throughout deployment and early production to align on milestones, troubleshoot emerging issues, and share performance insights.

Meanwhile, our engineers provide hands-on guidance for scaling and optimizing post-training workloads, ensuring models remain efficient and compliant with your enterprise standards.

Beyond deployment, our team offers ongoing advisory support, from model evaluation to continuous improvement, so your on-premise deployment remains reliable, secure, and high-performing as your needs evolve.

Each engagement begins with a dedicated deployment team that works closely with your technical and security stakeholders to ensure seamless installation, integration, and configuration within your infrastructure.

We conduct weekly check-ins throughout deployment and early production to align on milestones, troubleshoot emerging issues, and share performance insights.

Meanwhile, our engineers provide hands-on guidance for scaling and optimizing post-training workloads, ensuring models remain efficient and compliant with your enterprise standards.

Beyond deployment, our team offers ongoing advisory support, from model evaluation to continuous improvement, so your on-premise deployment remains reliable, secure, and high-performing as your needs evolve.